Roadmap to Computer Vision 🦝

Welcome to the Computer Vision Roadmap by Programming Club IITK, wherein we’ll be building right from the preliminary techniques of Image Processing and thereafter largely cover the intersection of Machine Learning and Image Processing. Thereafter, we shall be touching upon various cutting-edge techniques in GenAI and Representational Learning (specifically, GNNs) which are mildly related to the domain of CV and CNNs. We’re expecting that you’re here after completing the Machine Learning Roadmap, which covers the basics of Machine Learning, Neural Networks, Pipelines and other preliminary concepts; so in this roadmap, we assume that the explorer has that knowledge and we pick up from there. Even if you have explored Machine Learning independently, we strongly recommend you to go through the ML Roadmap once before starting this. Although all topics are not prerequisite, but most of them are.

Also do remember, in case you face any issues, the coordies and secies \@Pclub are happy to help. But a thorough research amongst the resources provided before asking your queries is expected :)

🦝 Meta-Knowledge

We started off our Machine Learning journey with the example of automating the differentiation process between a Raccoon 🦝 and a Red Panda. In the ML Roadmap we played a lot with numbers and datasets, but how do we convert the images of a Raccoon into forms which can be interpreted by our models? More importantly, how are these images represented by computers? Can we simply run the model on these images or do we require to alter the image in order to get good results? Let’s try to find the answers of these questions.

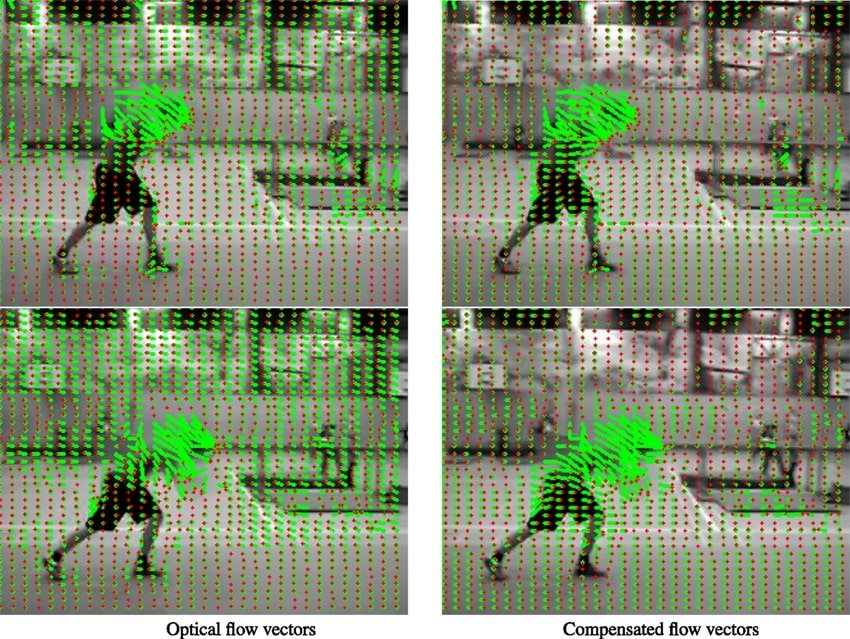

Manipulation of the mathematical representation of Digital Images in order to extract, alter, add or remove some form of information is Image Processing. When we perform some image processing algorithm/method on an image, we get image. For instance, enhancing image quality, converting a colored image to black and white image, or applying any Instagram filter counts as image processing.

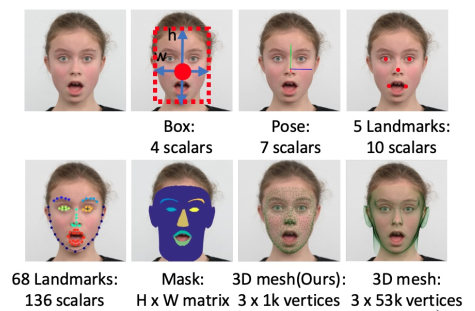

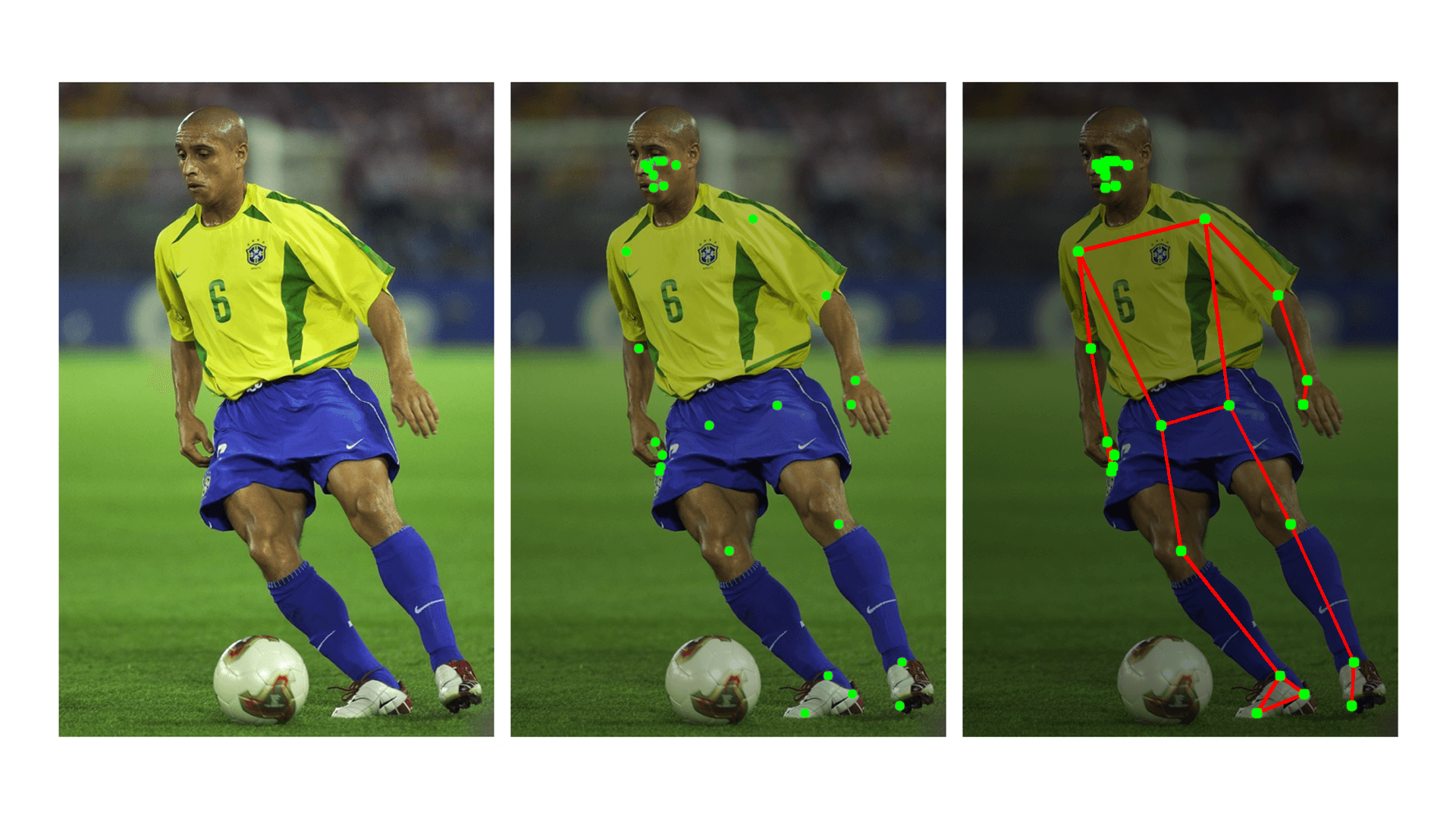

Digital Image Representation

Then what is Compute Vision? CV is a field of artificial intelligence (AI) that uses machine learning and neural networks to teach computers and systems to derive meaningful information from digital images, videos and other visual inputs—and to make recommendations or take actions when they see defects or issues. Image processing may not always utilize AI/ML, while on the other hand, CV is strictly a subset of AI. This Article by IBM provides a great introduction to CV. You can go through the following material to understand the difference between CV and Image Processing better:

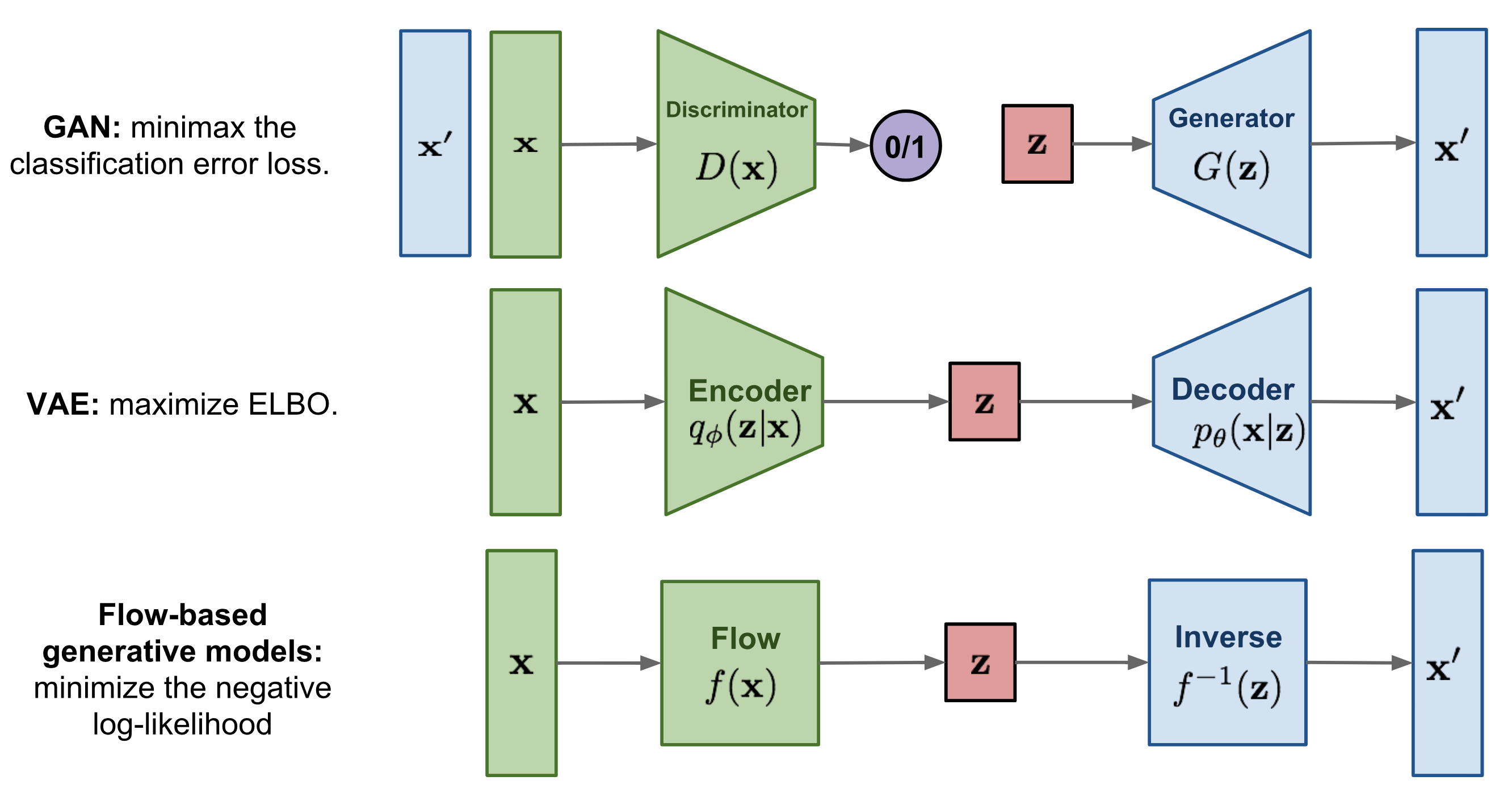

Optional Read: What is CV and Understanding Human Vision

🦝 Meta-Roadmap

This section largely focusses on how to use this roadmap in a efficient manner, and how you can play around with the same. Note that most of the Computer Vision courses or learning materials focus mostly on the Convolutional Neural Net (A special type of NN which we shall explore later) part of CV, which is an extremely small subset of what Image Processing and CV truly encompasses. It is also not very intuitive and does not help one in understanding the logic behind how object identification or classification is happening (You’d be able to classify an image as a Raccoon 🦝 or a Red Panda, but you’d not know WHAT the CNN is actually doing behind the scenes; it’s essentially a Blackbox).

In this roadmap, we start off with low level Image Processing which involves understanding the theory of Digital Images and their manipulation without the use of these backboxes and cover the same in the first two weeks. This shall hopefully provide you a decent intuition behind how things might work behind the scenes and the “logic” behind the processing. Post that, we bring Deep Learning into the picture in week 3 by introducing CNNs. The first 3 weeks MUST be followed in the prescribed order.

Post CNNs, we delve deep into the famous CNN architectures and use cases in weeks 4 and 5. You can study these two weeks in any order because they are largely independent. Note that in both the weeks, we start off by providing the non-ML approach to solving the problem at hand which builds your logic and then move on to how these problems can be solved using CNNs.

Henceforth, you can take three paths:

- Continue with the applications of Detection and Segmentation in Week 6, explore Face Detection and Recognition, Pose Estimation, Motion and Tracking.

- Switch to learning Generative Models. For this track, you can skip week 6 and directly do weeks 7 through 10; and then 12

- Explore a unique type of Neural Networks called Graph Neural Networks in week 11.

These three tracks are largely independent of each other so can be explored in any order.

🦝 Recurring Resources

Throughout the Roadmap, we’ll be constantly referring to a few course websites and lecture notes. So it’s recommended that you keep referring them and exploring the stuff we’ve not included if it seems interesting. It’s highly recommended to read this section thoroughly and keep these resources handy/at the back of your mind while you’re on this CV Journey.

- CS131 Computer Vision: Foundations and Applications: This is an introductory Computer Vision course which covers fundamental methods to process, manipulate and extract information from images. This largely covers mathematics, transformations and non-ML algorithms. I shall refer this intensively in the first 2 weeks, and then later during recognition, detection and segmentation sections. Following are some important links:

-

OpenCV Course: This is an instructive hands on course on OpenCV which consists of a series of notebooks which guide you through how to implement various Computer Vision and Image Processing methods via OpenCV library of Python. This GitHub Repository consists of all the resources of the course. This course is divided into two parts: - OpenCV: Starts off by introducing how digital images and color spaces work and goes on to explain various transformation, filters and methods like Convolutions, Thresholding, Moments etc. Then it has notebooks which cover practical applications like detection, recognition, tracking, style transfer etc. - Deep Learning CV: As the name suggests, this section provides a hands on learning approach to Deep Learning using PyTorch, Keras and TensorFlow. This also contains various projects which shall help you to apply this knowledge to real life.

👾How to use this Course? Mastering Machine Learning requires a mastery of theory, mathematics and applications. Unfortunately this course only fulfills the application part of it and does not include theory and mathematics. As we proceed through this roadmap, we’ll provide you various resources to master the theory and mathematics of various methods. Use this course in tandem with the theory provided in order to have an all round understanding. Keep referring to these notebooks everyday and going through the topics as and when they are covered in the week.

-

OpenCV Official Tutorials: The Tutorial articles on the official site of OpenCV provide a decent introduction to theory, but most importantly provide a great walkthrough of the OpenCV library, which is really important for implementing any CV project in Python. You can find the tutorials via the following link: https://docs.opencv.org/4.x/d6/d00/tutorial_py_root.html

-

Computer Vision: Algorithms and Applications Book by Richard Szeliski: This book covers almost all the topics required to have a great foundational knowledge of Image Processing, but might be a bit too advanced and extensive at times if followed along with the resources provided. You can give it a read for some, or all topics if you find learning better this way. Here’s a link to downloading the book for free: https://szeliski.org/Book/

-

Introduction to Computer Vision Course by Hany Farid, Prof at UC Berkeley: This playlist consists of concise (mostly less than 10 mins) videos which brilliantly capture the mathematics, logic and visualization of various introductory CV and Image Processing concepts, along with their implementation in python. The course is so easy to follow that if you’re not able to understand any concept via the resources provided, it’s guaranteed that a video from this playlist will help you understand it. You can find the playlist here: https://www.youtube.com/playlist?list=PLhwIOYE-ldwL6h-peJADfNm8bbO3GlKEy

- CS231n: Deep Learning for Computer Vision: This builds on CS131 by introducing CNNs, their training, architectures, Deep Gen Models and other methods. This course shall be referred week 3 onwards. Following are some important links:

- CS224W: Machine Learning with Graphs: Provides an introduction to Graphs, and Graph Neural Networks. Will be constantly referred in week 11.

- CS236: Deep Generative Models: Gold Standard Gen AI course. Shall be referred constantly from weeks 8 through 10.

- CS294-158-SP20: Deep Unsupervised Learning:

- CSE 599: Generative Models:

- Jeremy Jordan Website: This Website maintained by Jeremy Jordan has a bunch of interesting articles on various Machine Learning and Data Science topics, along with guided resources and lecture notes of various famous courses. Although this website will not be repeatedly referred, you can go through the content if you find it interesting.

Considering we are done and dusted with the prelude, let’s get started with the Computer Vision Journey 👾

🦝 Table of Contents

🦝 Week 1: Image Processing I

👾Day 1: Digital Images and Linear Algebra Review

👾Day 2: Color and Color Spaces

👾Day 3: Pixels, Filters and Convolutions

👾Day 6: Morphological Transformations and Edge Detection

👾Day 7: Fourier Transforms and Contours

🦝 Week 2: Image Processing II

👾Day 1: Key points, Image Features, Feature Descriptors

👾Day 5: Geometric Transformations and Geometry Detection

👾Day 6 and 7: Practice and Hand-on Stuff

🦝 Week 3: Convolutional Neural Networks

👾Day 1: Intro to CNNs for Visual Recognition

👾Day 2: Refresher: Loss Functions, Optimization and BackProp

👾Day 3: Pooling, FC Layers, Activation Functions

👾Day 4: Data Preprocessing, Regularization, Batch Norm, HyperTuning

👾Day 5: Data Augmentation, Optimizers Review

👾Day 6: Deep Learning Hardware and Frameworks

🦝 Week 4: Segmentation and CNN Architectures

👾Day 1: Segmentation and Clustering

👾Day 6: VGG16, VGG19 and GoogleNet

🦝Week 5: Object Recognition and Detection

👾Day 2: Object Detection by Parts

👾Day 3: InceptionNet and ResNets

👾Day 4: Region-Based CNN, Fast RCNN, Faster RCNN

👾Day 7: OCR and Visualizations

🦝Week 6: Some Important Applications

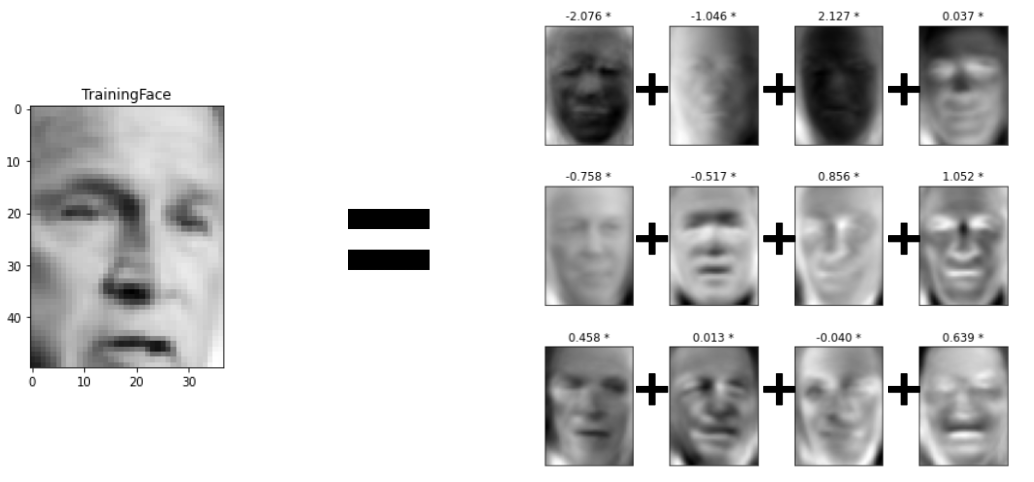

👾Day 1: Face Recognition, Dimensionality Reduction and LDA

👾Day 3: Face Recognition Models

👾Day 5 and 6: Motion and Tracking

🦝Week 7: Intersections with NLP and Visual Attention

👾Day 1: Recurrent Neural Networks

👾Day 3: Videos with CNNs and RNNs

👾Day 7: Visual QnA and Visual Dialog

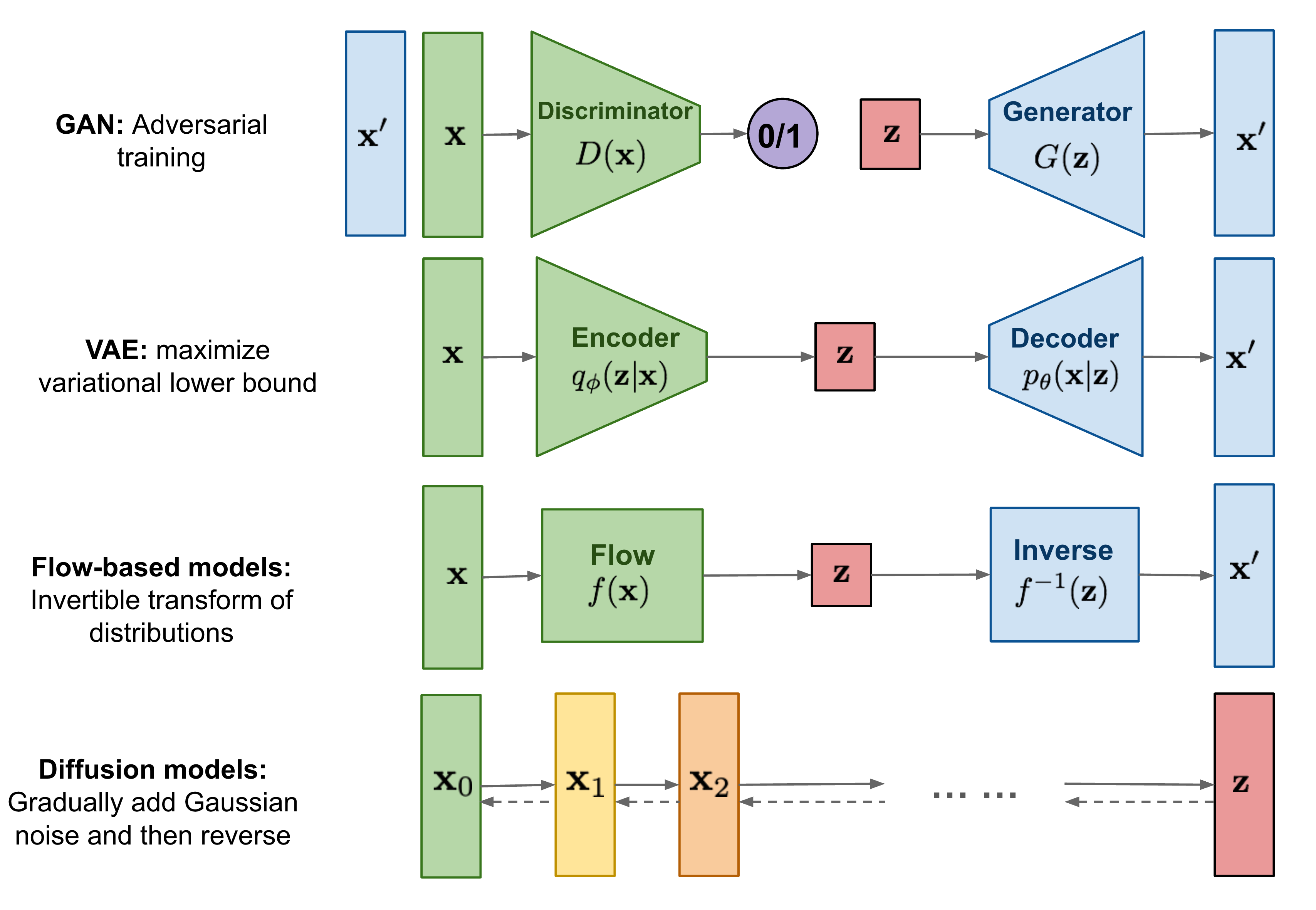

🦝Week 8: Generative Models: Prelude

👾Day 1: Introduction to Deep Generative Models

👾Day 2: Probability for Generative Models

👾Day 4: Maximum Likelihood Learning, PixelCNN, PixelRNN

👾Day 5: Latent Variable Models

👾Day 6 and 7: Variational Autoencoders

🦝Week 9: Generative Models II

👾Day 4 and 5: Normalizing Flow Models

👾Day 6: Generative Adversarial Networks I

👾Day 7: Generative Adversarial Networks II

🦝Week 10: Generative Models III

👾Day 1 and 2: Energy Based Models

👾Day 3 and 4: Score Based Models

👾Day 5: Latent Representations and Evaluation

👾Day 6: Some Variants and Ensembles

👾Day 7: Wrap Up and Further Readings

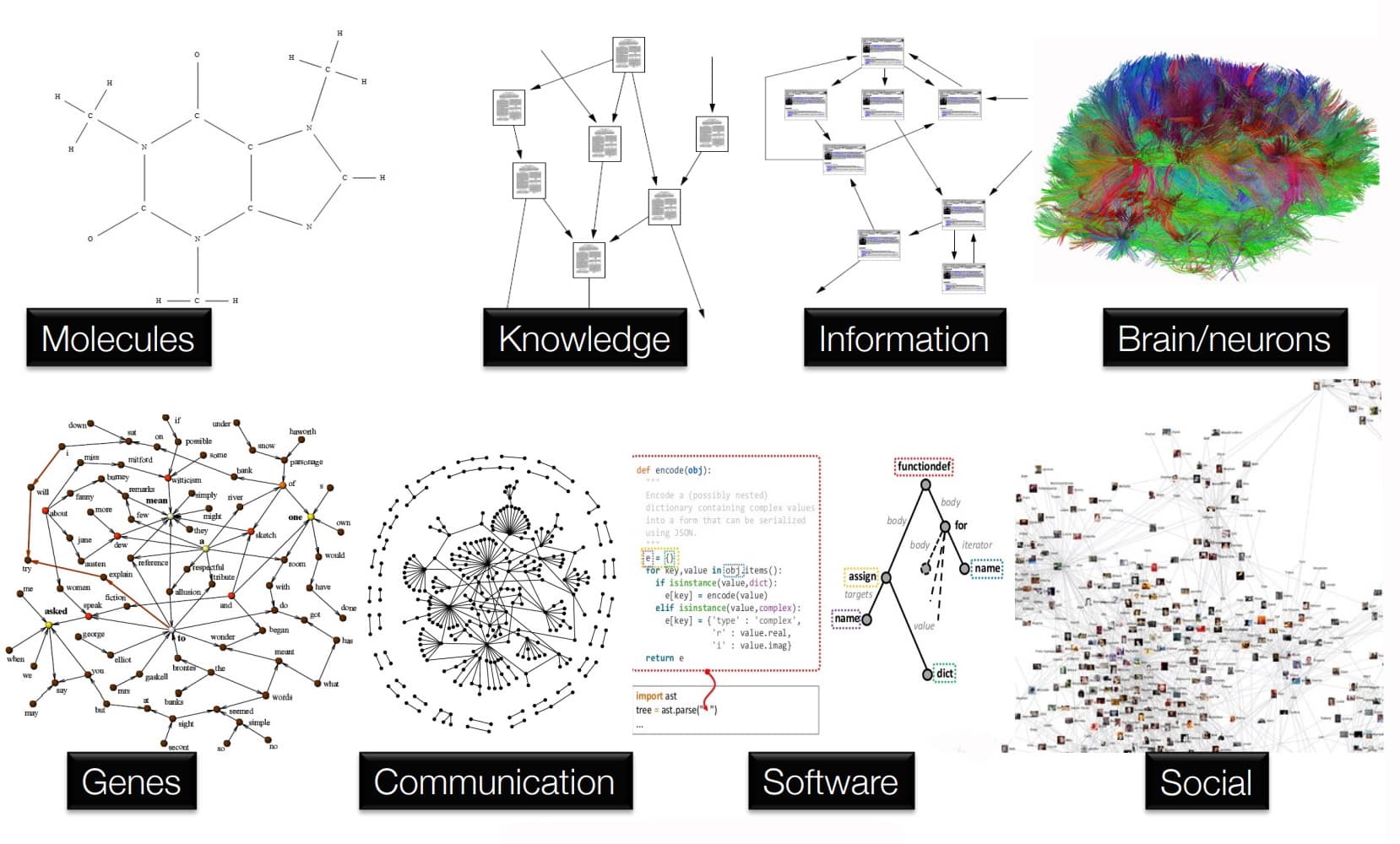

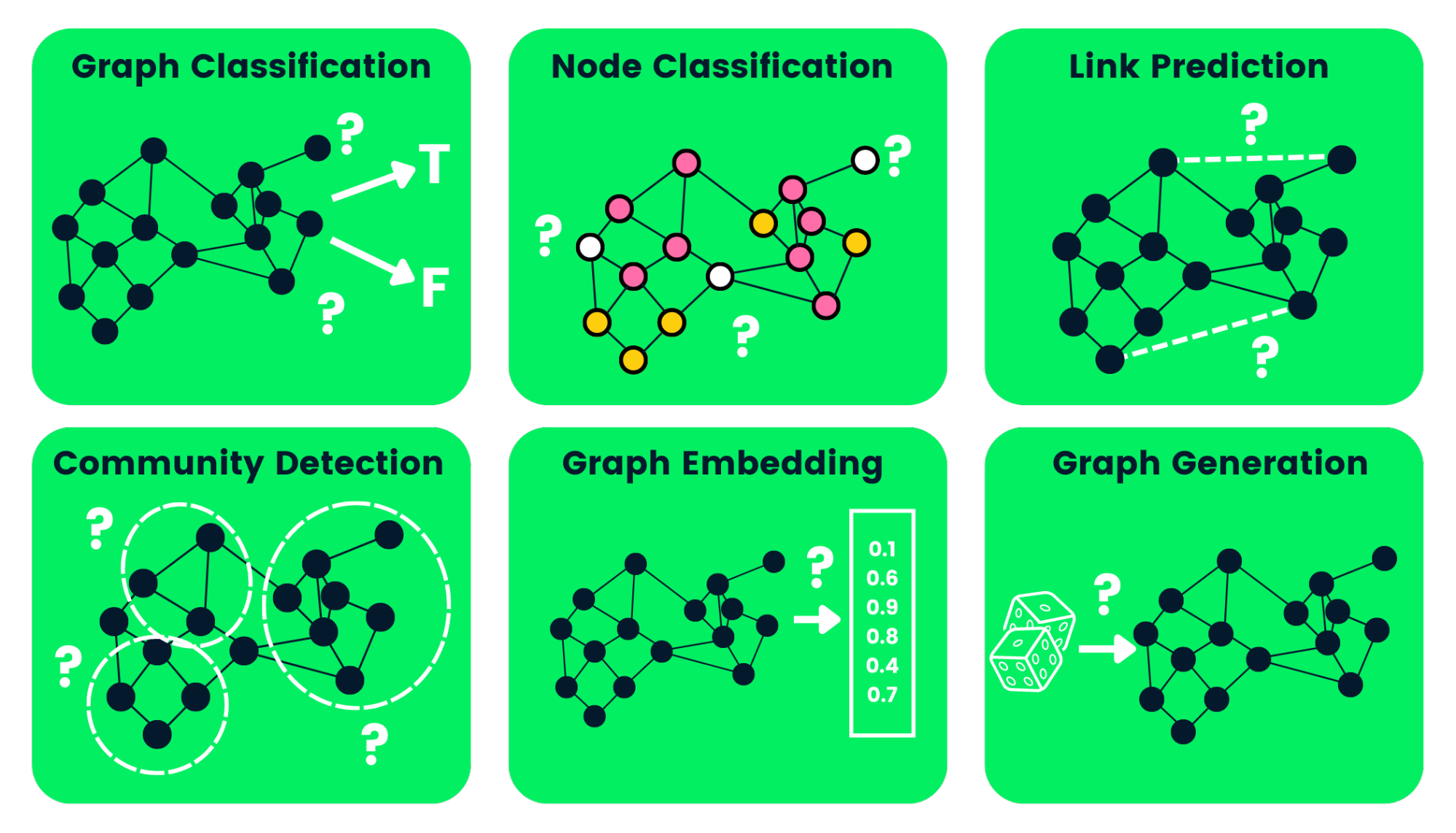

🦝Week 11: Graph Neural Networks

👾Day 1: Intuition, Introduction and Prelims

👾Day 2: Node Embeddings and Message Passing

👾Day 4: GNN Augmentations and Training GNNs

👾Day 5: ML with Heterogeneous Graphs, Knowledge Graphs

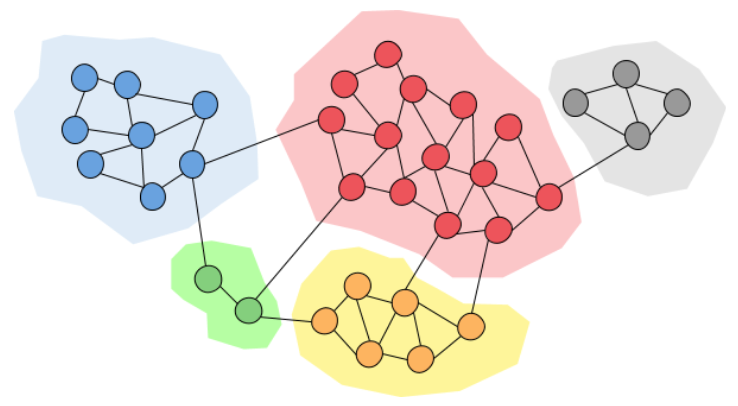

👾Day 6: Neural Subgraphs and Communities

👾Day 7: GNNs for Recommender Systems

🦝Week 12: Diffusion Models

👾Day 1: Introduction to Diffusion Models

👾Day 2: Physics and Probability

👾Day 3: Score-Based Generative Models

👾Day 4: Denoising Diffusion Probabilistic Models

👾Day 6: Implementing Diffusion Model

👾Day 7: Autoregressive Diffusion Model (ARDM)

🦝 Week 1: Image Processing I

You might want to constantly refer to the Exercises of the first chapter of CV by Szeliski, provide an intensive practice of all almost all the concepts that we shall cover in the first 2 weeks. You might want to practice simultaneously or at the end; completely upon you.

👾Day 1: Digital Images and Linear Algebra Review

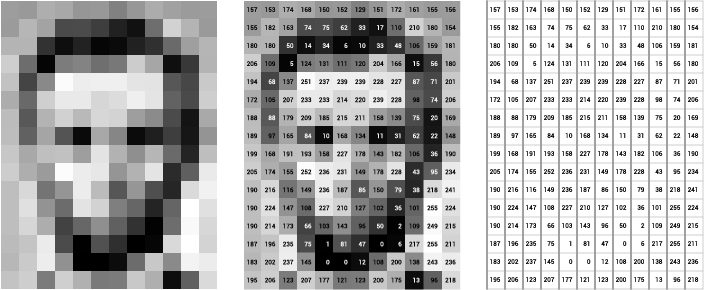

In digital world an image is a multi-dimensional array of numeric values. It is represented through rows & columns each storing data in numeric format which represents a particular region of Image. The individual cell is called pixel, the image’s dimension is represented through number of pixels in it’s height & width. To learn in detail, [click here](http://lapi.fi-p.unam.mx/wp-content/uploads/1.4-Opt_ContinousImages.pdf.

Linear Algebra will be extremely important in implementing various transformations. For a refresher, refer to the following PDFs:

👾Day 2: Color and Color Spaces

Color Space Comparison

Color is a vital element in computer vision and digital imaging, influencing how we perceive and interpret visual information. In the world of programming and image processing, color spaces play a crucial role in manipulating and analyzing images. Understanding color spaces is essential for anyone working with image data, as they provide different ways to represent and process color information.

A color space is a specific organization of colors, allowing us to define, create, and visualize color in a standardized way. Different color spaces are used for various applications, each offering unique advantages depending on the context. The most common color spaces include RGB (Red, Green, Blue), HSV (Hue, Saturation, Value), HSL(Hue, Saturation & Lightness) and CMYK(Cyan, Magenta, Yellow, and Key).

Read more about Color Space, here & more here

👾Day 3: Pixels, Filters and Convolutions

Refer to the lecture notes of fourth lecture of CS131 for today’s topics.

🐼Image Histograms

Main Resource: Image Processing from Szeliski

An image histogram is a type of histogram that acts as a graphical representation of the tonal distribution in a digital image. It plots the number of pixels for each tonal value. By looking at the histogram for a specific image a viewer will be able to judge the entire tonal distribution at a glance. Go through the following resources for understanding Histograms better:

🐼Image Filters

Filters are simply functions (linear systems) applied on an image which output a new image. The new pixel values are some linear transformations of the original pixel values. Filters are used to extract important information from the image.

Following video will provide an intuitive understanding of Filters: How do Image Filters work

Main Resource to understand Image Filters: Linear Filtering and Neighborhood Operators by Szeliski

Image with various filters applied

🐼 Convolutions

Convolutions are a fundamental operation in the field of image processing and computer vision, forming the backbone of many advanced techniques and algorithms. They are essential for extracting features from images, enabling us to detect edges, textures, and patterns that are crucial for understanding and analyzing visual data.

At its core, a convolution is a mathematical operation that combines two functions to produce a third function, expressing how the shape of one is modified by the other. In the context of image processing, this involves overlaying a small matrix called a kernel or filter on an image and performing element-wise multiplication and summation. The result is a new image that highlights specific features based on the chosen kernel.

convolutions

Watch this amazing video on convolutions

Convolutions are extensively used in various applications, including:

Edge Detection: Identifying boundaries and contours within images. Blurring and Smoothing: Reducing noise and enhancing important features. Sharpening: Enhancing details and making features more distinct. Feature Extraction: In neural networks, convolutions help in identifying and learning important features from images for tasks like classification and object detection.

Understanding convolutions is critical for anyone working with image data, as they provide the tools to transform raw images into meaningful information. In this section, we will delve into the principles of convolutions, explore different types of kernels, and demonstrate how they are applied in practical image processing tasks. By mastering convolutions, you’ll gain the ability to manipulate and analyze images with precision and efficiency.

👾Day 4: OpenCV 101

OpenCV is a library designed to offer a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. Its primary goal is to provide a ready-to-use, flexible, and efficient computer vision framework that developers can easily integrate into their applications.

You can download OpenCV using pip. Refer here for some Video demonstration. This is a blog on OpenCV.

Moreover, as and when you keep covering concepts, refer to the relevant notebooks of the OpenCV course provided in Recurring Resources Section to understand implementation of that concept via OpenCV

Until now we’ve covered Color Spaces, Digital Images, filters and convolutions. Go through the following tutorials to implement methods relating to these topics in OpenCV:

👾Day 5: Thresholding

Thresholding is one of the segmentation techniques that generates a binary image (a binary image is one whose pixels have only two values – 0 and 1 and thus requires only one bit to store pixel intensity) from a given grayscale image by separating it into two regions based on a threshold value. Hence pixels having intensity values greater than the said threshold will be treated as white or 1 in the output image and the others will be black or 0. Go through the following articles to understand thresholding:

Refer to notebook 9 of OpenCV Course for practical demonstration.

Thresholding

👾Day 6: Morphological Transformations and Edge Detection

🐼 Binary Image Processing

While non-linear filters are often used to enhance grayscale and color images, they are also used extensively to process binary images. The most common binary image operations are called morphological operations, because they change the shape of the underlying binary objects. Some of the well known Morphological Transformations are: Opening, Closing, Dilation, Erosion, Majority.

To get an in depth understanding of Morphological Transformations, go through the following extract from Szeliski: Binary Image Processing

For implementation of binary processing methods, go through the following article: Morphological Transformations in OpenCV

Morphological transformations

🐼 Edge Detection

Edge detection and line detection are techniques in computer vision that play a crucial role in various applications, such as image processing, object recognition, and autonomous systems. The principle behind edge detection involves identifying areas of significant intensity variation or gradients in the image. Edges often correspond to changes in color, texture, or intensity, and detecting them is crucial for tasks like object recognition, image segmentation, and feature extraction.

Canny Edge Detection

Main Theory Resource: Notes of Lecture 5 and Lecture 6 of CS131.

For Diving Deep into Edge Detection, go through the following links:

- Sobel and Canny Edge Detection

- Image Gradients in OpenCV

- Canny Edge Detection in OpenCV

- Hough Transform inn OpenCV

👾Day 7: Fourier Transforms and Contours

🐼Fourier Transforms

The Fourier Transform is an important image processing tool which is used to decompose an image into its sine and cosine components. The output of the transformation represents the image in the Fourier or frequency domain, while the input image is the spatial domain equivalent. In the Fourier domain image, each point represents a particular frequency contained in the spatial domain image.

The Fourier Transform is an important image processing tool which is used to decompose an image into its sine and cosine components. The output of the transformation represents the image in the Fourier or frequency domain, while the input image is the spatial domain equivalent. In the Fourier domain image, each point represents a particular frequency contained in the spatial domain image.

Go through the following resources for an in depth understanding and implementation of Fourier Transforms:

🐼 Contours

Contours can be explained simply as a curve joining all the continuous points (along the boundary), having same color or intensity. The contours are a useful tool for shape analysis and object detection and recognition. These are largely used in detection and matching applications. For a better understanding, refer to notebook 11 of OpenCV Tutorial and follow the link:

🦝 Week 2: Image Processing II

👾Day 1: Key points, Image Features, Feature Descriptors

🐼 Keypoints

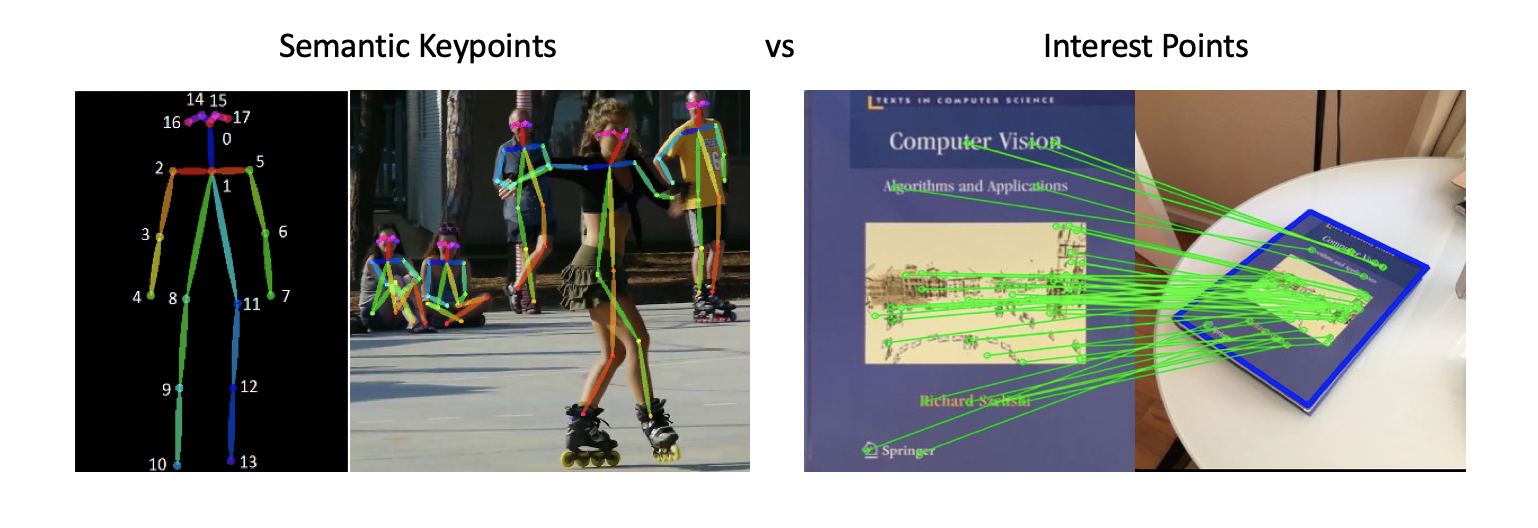

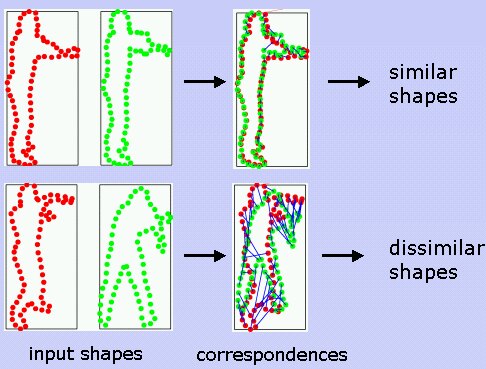

Semantic Keypoints and Keypoint Matching

Keypoints are the same thing as interest points. They are spatial locations, or points in the image that define what is interesting or what stand out in the image. Interest point detection is actually a subset of blob detection, which aims to find interesting regions or spatial areas in an image. The reason why keypoints are special is because no matter how the image changes… whether the image rotates, shrinks/expands, is translated (all of these would be an affine transformation by the way…) or is subject to distortion (i.e. a projective transformation or homography), you should be able to find the same keypoints in this modified image when comparing with the original image.

Keypoints are extremely important in Computer Vision and the detection of keypoints has various applications for instance, -time face matching, object tracking, image contouring, image stitching, motion tracking etc. To have a better understanding of keypoints, refer to this medium article and this stackoverflow discussion

🐼 Image Registration

Let’s say you have two images of the same cute Raccoon 🦝, but the orientation of raccoon in each image is different? A human brain can easily understand which point corresponds (or “aligns”) to which point of the Raccoon in both the images. But how do we attain this with Computer Vision? The answer is Image Registration.

Image registration is the process of spatially aligning two or more image datasets of the same scene taken at different times, from different viewpoints, and/or by different sensors. This adjustment is required in multiple applications, such as image fusion, stereo vision, object tracking, and medical image analysis. Go through the following article to have a better understanding of Image Registration: https://viso.ai/computer-vision/image-registration/#:~:text=Image%20registration%20algorithms%20transform%20a,tracking%2C%20and%20medical%20image%20analysis.

🐼 Robust feature matching through RANSAC

RANSAC (and variants) is an algorithm used to robustly fit to the matched keypoints to a mathematical model of the transformation (“warp”) from one image to the other, for example, a homography. The keyword here is “robustly”: the algorithm tries really hard to identify a large (ideally, the largest) set of matching keypoints that are acceptable, in the sense they agree with each other on supporting a particular value of the model. For a better understanding of RANSAC, go through the following content:

- MIT Lecture Slides

- CS131 Lecture 7 Notes: These also cover some important concepts on Keypoint Localization and Invariant Keypoints. Go through these topics as they are important for the next subsection.

🐼 Scale Invariant Keypoint Detection

Let’s say you have a video of a Raccoon 🦝 going beautifully through trash 🗑️, and you need to track the motion of the same. These applications require keypoint detections across a wide number of similar images at vastly different scales without the prior knowledge of the size of the Raccoon. For such forms of keypoint detection, we need a function which takes in the image, and irrespective of the scale of the image returns the same keypoints. These are done via various functions and methods like Average Intensity, Difference of Gaussians and Harris-Laplacian. To understand these algorithms, go through CS131 lecture 8 notes

🐼 Scale Invariant Feature Transform (SIFT) Algorithm

SIFT Algorithm flowchart

By extracting distinctive features from images, SIFT allows machines to match those features to new images of the same object. Using SIFT, you shall be able to recognize the Cute Raccoon 🦝 from any angle and orientation, even after distortions and blurring. SIFT follows a four-step procedure:

- Constructing a Scale Space: To make sure that features are scale-independent

- Keypoint Localisation: Identifying the suitable features or keypoints

- Orientation Assignment: Ensure the keypoints are rotation invariant

- Keypoint Descriptor: Assign a unique fingerprint to each keypoint

Feature mapping using SIFT

Go through CS131 lecture 8 notes, along with the following blogs in order to understand the mathematics and implementation of SIFT:

🐼 Histogram of Oriented Gradients (HOG)

Histogram of Oriented Gradients

We’ve already talked enough about Histograms and Gradients, so understanding this algorithm should not really be tough. The histogram of oriented gradients (HOG) is a feature descriptor used in computer vision and image processing for the purpose of object detection. The technique counts occurrences of gradient orientation in localized portions of an image. Go through CS131 lecture 8 notes and these blogs to learn HOG:

👾Day 2: Pyramids and Wavelets

(Day 2 shall be pretty heavy, but the next 2 days are extremely light so according to convenience you can prolly spend a bit more time with Pyramids and Wavelets and speed-run the next 2 days. )

Main Study Resource: Pyramids and Wavelets from Szeliski

Changing the scale of image (reducing and increasing it size) is so fundamental that we simply don’t try to question the intricacies and algorithms behind it and take it for granted. Today we shall largely talk about up-sampling and down-sampling images and how can pyramids and wavelets be utilized in image processing.

🐼Pyramids

Image Pyramid

Working with images with lower resolution is extremely memory, time and power efficient because we have less amount of information (to be specific, signals) to process. A common way to do this is via a combination of blurring and down-sampling; and these processes can be implemented iteratively to form a pyramid-like scheme. This method is called the method of pyramids and on the basis of the types of filter we’re using for blurring, we can have different types of pyramids. Take a look at the following videos to get a better understanding:

- Gaussian Pyramid: Largely used for display resolution, dimensionality reduction, efficient search and match etc.

- Laplacian Pyramid: For sub-band analysis, de-noising, compression

Go through this NPTEL video for a better understanding of applications of Pyramids.

Refer to the first 4 subsections of Pyramids and Wavelets from Szeliski to understand more about Interpolation (up-sampling from lower to higher resolution), Decimation (down-sampling from higher to lower resolution) and Multi-Resolution Representations.

Here is an OpenCV tutorial on how to implement Image Pyramids

🐼Sub-Band Coding

In signal processing, sub-band coding (SBC) is any form of transform coding that breaks a signal into a number of different frequency bands, typically by using a fast Fourier transform, and encodes each one independently. This decomposition is often the first step in data compression for audio and video signals.

Imagine you have a really long book on Raccoons 🦝 that you need to summarize. Instead of summarizing the whole book in one go, you might find it easier to break the book into chapters and summarize each chapter individually, for instance divide the book into subtopics like their inherent laziness, their trash-picking skills, cuteness (subjective, but I strongly agree), attempted scary faces etc. Sub-band coding works on a similar principle for signals (like audio or images).

The idea is to:

- Improve Efficiency: By breaking down a signal into smaller frequency bands, you can process and compress each part more efficiently.

- Reduce Redundancy: Different parts of a signal might contain redundant information. Sub-band coding helps to identify and remove this redundancy.

- Enhance Quality: When you process each sub-band separately, you can apply techniques that are best suited for each band, leading to better overall quality.

Sub-Band Coding is primarily used in Audio Compression, Image Compression, Speech Processing and Telecommunications. We shall majorly look at the Image Compression part of it in this section.

Go through the following video to understand Sub-Band Coding better: https://www.youtube.com/watch?v=bci7xMFVnvs

🐼Wavelets and Multi-Resolution Processing

Wavelets are a famous alternative to Pyramids, and are filters that localize a signal in both space and frequency (like the Gabor filter) and are defined over a hierarchy of scales. Wavelets provide a smooth way to decompose a signal into frequency components without blocking and are closely related to pyramids.

The fundamental difference between Pyramids and Wavelets is that traditional pyramids are overcomplete, i.e., they use more pixels than the original image to represent the decomposition, whereas wavelets provide a tight frame, i.e., they keep the size of the decomposition the same as the image.

Go through the remaining portions of Pyramids and Wavelets from Szeliski to understand how Wavelets are constructed and their applications in image blending.

👾Day 3: Resizing Algorithms

In the last section, we primarily talked about simply down-sampling and up-sampling wherein we were just scaling the length and width of the image by a factor. The issue with that is that this might lead to artifacts or loss of important features and information. For this, we use Seam Carving Algorithms to efficiently resize the image from $(m*n)$ to $(m’ * n’)$ pixels.

Human vision is more sensitive to edges, because they show a high Image Gradients, so it is important to preserve edges. But even if we remove contents from smoother areas, we’ll likely retain information. We utilize a gradient-based energy function for this kind of transformation, which detects the importance of various regions by calculating sum of absolute values of the x and y gradients.

We’ve identified which pixels are ‘important’ and which are ‘less important’. How do we remove the ‘unimportant pixels’ in a way which preserves the core information of the image. For this, we define a ‘Seam’, that is a connected path of pixels from top to bottom or left to right which minimizes the energy function.

The concept of Gradient-based Energy Function and Seam is used to reduce the size of the image, via Seam Carving Algorithm.

A similar approach can be employed to increase the size of images by expanding the least important areas of the image.

Go through CS131 Lecture 9 Notes (download the file if it does not render inline) to understand these concepts better and explore its implementation via code.

👾Day 4: Strides and Padding

Let’s take a detour from the status quo and learn some basic concepts in image processing which shall be helpful in building up to Convolutional Neural Networks.

In the realm of image processing and neural networks, strides and padding are two crucial concepts that significantly impact the behavior and output of convolutional operations. Understanding these concepts is essential for effectively designing and implementing convolutional neural networks (CNNs) and other image processing algorithms.

Strides Strides determine how the convolutional filter moves across the input image. In simpler terms, strides specify the number of pixels by which the filter shifts at each step. The stride length affects the dimensions of the output feature map.

Stride of 1: The filter moves one pixel at a time. This results in a detailed and often larger output. Stride of 2: The filter moves two pixels at a time, effectively down-sampling the input image. This produces a smaller output but reduces computational complexity. Choosing the right stride is important for balancing detail and efficiency. Smaller strides capture more detailed information, while larger strides reduce the size of the output, which can be beneficial for computational efficiency and reducing overfitting in deep learning models.

Padding Padding refers to the addition of extra pixels around the border of the input image before applying the convolutional filter. Padding is used to control the spatial dimensions of the output feature map and to preserve information at the edges of the input image.

Valid Padding (No Padding): No extra pixels are added. The output feature map is smaller than the input image because the filter cannot go beyond the boundaries. Same Padding (Zero Padding): Extra pixels (usually zeros) are added around the border to ensure that the output feature map has the same spatial dimensions as the input image. This allows the filter to cover all regions of the input image, including the edges. Padding helps to maintain the spatial resolution of the input image and is crucial for deep neural networks where preserving the size of feature maps is often desirable.

Why Strides and Padding Matter Control Over Output Size: By adjusting strides and padding, you can control the size of the output feature maps, which is crucial for designing deep neural networks with specific architectural requirements. Edge Information Preservation: Padding helps to preserve edge information, which is important for accurately capturing features located at the boundaries of the input image. Computational Efficiency: Strides can be used to down-sample the input image, reducing the computational burden and memory usage in deep learning models.

Here’s an amazing blog, make sure to check it out.

👾Day 5: Geometric Transformations and Geometry Detection

🐼 Geometric Transformations

Earlier this week, we explored as to how up-scaling, down-scaling and resizing is performed on images via various transformations. Another set of important operations are the geometric transformations like translations, skewing, rotations, projections etc, which are essentially linear transforms. Ideally, you’d think that matrix multiplication should work in such a case, right? But the problem is that underlying pixels of a screen can’t be transformed into other shapes, and they will remain squares of fixed sizes. So we need to create certain mappings such that we are able to perform these transformations, while preserving the structure of the fundamental blocks of an image: the square pixels.

This is a good time to revise key-points, feature descriptors, pyramids and wavelets because these concepts shall be constantly used in building up geometric transformations.

🐯Warping

Warping a Raccoon Image

Geometric Image Transformations are also called Warping. There are largely 2 ways to warp an image:

- Forward Mapping: In this method, each pixel in the source image is mapped to an appropriate place in the destination image. Thus, some pixels in the destination image may not be mapped. We need interpolation to determine these pixel values. This mapping was used in our point-morphing algorithm.

- Reverse Mapping: This method goes through each pixel in the destination image and samples an appropriate source image pixel. Thus, all destination image pixels are mapped to some source image pixel. This mapping has been used in the Beier/Neely line-morphing method.

Following are some good resources to understand basic warping:

Post gaining this basic intuition of Warping, go through the following resources for understanding the development of modern warping techniques, and Feature-based morphing which is an application of Geometric Transformation:

- Geometric Image Transformation Lecture Slides by Harvey Rhody, Rochester Institute of Tech

- Geometric Transformations from Szeliski

- Geometric Transformations OpenCV Tut

🐼 Contour Matching

Main Resource: Notebook 12 of OpenCV Course

Contour Matching

An important application of image processing is detecting and recognizing objects. Feature mapping is a relatively complex concept, and using it to differentiate between a square and triangle is like using the Quadratic Formula to solve $x^2 - 1 = 0$ 👾. There are methods via which we can differentiate between objects, and detect them without detecting key-points and mapping them.

🐯 Hu Moments

Image moments are a simply the weighted average of image pixel intensities. These weighted averages can be evaluated via various formulae. If we are able to define a unique moment for a shape, which is translation, scale, and rotation invariant, we will have a metric which can uniquely identify a shape. Hu Moment is such a moment that is translation, scale, and rotation invariant. TO learn more about moments and shape matching using hu moments:

- https://learnopencv.com/shape-matching-using-hu-moments-c-python/#:~:text=OpenCV%20provides%20an%20easy%20to,the%20images%20and%20use%20matchShapes

- https://cvexplained.wordpress.com/2020/07/21/10-4-hu-moments/

🐯Contour Detection and Matching

We explored contours last week, now we shall use contours to match shapes. Note that a contour can define the boundary of a shape, helping in eliminating all the features which are irrelevant in shape identification and matching. To learn about the same, follow this TDS blog

🐼 Line, Circle and Blob Detection

Main Resource: Notebook 13 of OpenCV Course

In Edge Detection, we explored the Hough Transform. Note that edge detection is fundamental to any kind of shape detection because the boundaries of shapes are comprised of edges. In this section, we shall be using Hough Transform for Line, Circle and Blob detection. Go through the following resources for the same:

- [Blob Detection Theory](https://repo.ijiert.org/index.php/ijiert/article/download/565/539/1086

- Blob Detection via DOG, DOH, Connected Components

- Hough Circle Transform OpenCV Tut

- Line, Circle, Blob Detection using Hough

- Detecting Ellipses in Images

🐼 Finding the Raccoon 🦝🥺

Main Resource: Notebook 14 of OpenCV Course

Find the Raccoon

The Cute Raccoon 🦝 is lost in an image full of hundreds of animals, and you’re missing the Raccoon🥺. For finding it, we need to spot the cute raccoon from this image wherein there are multiple animals. The raccoon is just hidden amidst all the noise and distraction, and MUST be spotted. Note, that mathematically speaking, the raccoon is nothing but a visual pattern 🤖 which can be easily spotted if we have it’s isolated image. We can just check as to which region in the larger image matches the pattern of the raccoon. This procedure in Image Processing is called Template Matching. For understanding how to find the Raccoon, go through the following links:

👾Day 6 and 7: Practice and Hand-on Stuff

Exercises of the first chapter of CV by Szeliski provide an intensive practice of all almost all the concepts covered in the last 2 weeks. You needn’t complete all the questions, you can selectively pick.

Along with these questions, you can try out all or some of these hands on projects (Don’t use CNNs or pre-trained models; the first 2 weeks were all about non black-box based image processing techniques implemented from scratch):

🐼 Face Detection and Recognition

Description: Implement a system that can detect faces in real-time from a webcam feed and recognize known individuals.

Key Concepts:

- Haar Cascades or HOG + Linear SVM for face detection.

- LBPH, Eigenfaces, or Fisherfaces for face recognition.

Project Steps:

- Capture video from a webcam.

- Detect faces in each frame.

- Recognize detected faces.

- Display names or IDs of recognized faces on the video feed.

🐼 Edge Detection and Image Filtering

Description: Develop an application that applies different image filters and edge detection algorithms to an input image.

Key Concepts:

- Sobel, Canny, and Laplacian filters for edge detection.

- Gaussian, median, and bilateral filters for smoothing and noise reduction.

Project Steps:

- Load an image from the disk.

- Apply various edge detection algorithms.

- Implement different image filtering techniques.

- Display the original and processed images side by side.

🐼 Barcode and QR Code Scanner

Description: Develop an application that can scan and decode barcodes and QR codes in real-time.

Key Concepts:

- Image thresholding and binarization.

- Contour detection and perspective transformation.

- Barcode and QR code decoding using libraries like zbar or OpenCV.

Project Steps:

- Capture video from a webcam.

- Detect and localize barcodes or QR codes in each frame.

- Decode the detected codes.

- Display the decoded information on the video feed.

🦝 Week 3: Convolutional Neural Networks

Third week onwards, we shall primarily focus on application of Deep Learning in the domain of Image Processing and Computer Vision, wherein we’ll start out with the basic CNN architecture and build our way to Generative Models

In Image Processing, we learnt about Convolution Operations, and in the ML Roadmap we explored Feedforward neural networks. It’s not tough to guess that CNNs will somehow integrate the Convolution Operation into Feedforward Neural Networks. How does this happen? Why does this work? Why not flatten the image into a vector? We shall answer these questions this week and also practically implement a basic CNN architecture.

👾Day 1: Intro to CNNs for Visual Recognition

Before we start off with theory, I’d highly recommend you to binge the following videos as they will provide you a great insight into the the workings of CNN (+the visualizations are hot, so these will help build strong intuition and visual understanding):

- CNN Visualization From Scratch

- CNN Visualized by Futurology

- 3D Convolutions Visualization

- 2D CNN on MNIST 3D visualization

🐼What are CNNs?

Convolutional Neural Networks (CNNs) are a class of deep neural networks commonly used for analyzing visual data. They are particularly effective for tasks involving image recognition, classification, and processing due to their ability to capture spatial hierarchies and patterns within images. Developed in the late 1980s, CNNs have since revolutionized the field of computer vision and have become the cornerstone of many modern AI applications. Unlike traditional neural networks, which treat input data as a flat array, CNNs preserve the spatial structure of the data by using a grid-like topology.

ConvNet

🐼Key Components of CNNs

-

Convolutional Layers: These layers apply convolution operations to the input, using learnable filters to detect various features such as edges, textures, and patterns. Each filter slides over the input image, producing a feature map that highlights the presence of specific features.

-

Pooling Layers: Pooling layers reduce the spatial dimensions of feature maps, helping to down-sample the data and make the network more computationally efficient. Common pooling operations include max pooling and average pooling.

-

Fully Connected Layers: After a series of convolutional and pooling layers, the high-level reasoning in the network is done via fully connected layers. These layers flatten the input data and pass it through one or more dense layers to perform classification or regression tasks.

-

Activation Functions: Non-linear activation functions like ReLU (Rectified Linear Unit) introduce non-linearity into the model, allowing it to learn complex patterns. Activation functions are applied after convolutional and fully connected layers.

-

Regularization Techniques: Techniques like dropout and batch normalization are used to prevent overfitting and improve the generalization capability of the network.

🐼Convolutional Layers

Convolutional layers are the fundamental building blocks of Convolutional Neural Networks (CNNs), which are designed to process and analyze visual data. These layers play a crucial role in detecting and learning various features within images, such as edges, textures, and patterns, enabling CNNs to excel in tasks like image classification, object detection, and segmentation.

A convolutional layer performs a mathematical operation called convolution. This operation involves sliding a small matrix, known as a filter or kernel, over the input image to produce a feature map. Each filter detects a specific feature, such as a vertical edge or a texture pattern, by performing element-wise multiplication and summing the results as it moves across the image.

You can read the below blog, to get further on CNNs. Introduction to CNN (Read Here).

Go through the second lecture of CS231n which gives an overview of Image Classification Pipelines. You can find the lecture slides here

👾Day 2: Refresher: Loss Functions, Optimization and BackProp

We’ll devote the second day primarily to revise concepts we’ve already covered in the ML roadmap and bring them into context with respect to CNNs by introducing SoftMax. Go through the following videos and lecture slides (you can also skim through the content if you feel you remember it):

- Lecture 3 of CS231n and its Lecture Slides

- Lecture 4 of CS231n and its Lecture Slides

👾Day 3: Pooling, FC Layers, Activation Functions

Today, we shall learn about certain CNN-specific concepts and patch them together to understand the architecture of a general CNN.

🐼 Pooling Layers

Unlike convolutional layers having kernels with trainable parameters, in pooling there’s no sort of trainable params. So if they aren’t trainable, what’s it’s use, basically pooling layers are used to compress/reduce the size of data while still having retained it’s important aspects.

There are various kinds of Pooling Layers- Max Pooling, Min Pooling, Average Pooling, etc. Here’s a short blog on Pooling Layers. If you are interested in some depths, check out this

🐼 Fully Connected Layers

After Convolutional & Pooling layers, let’s move to Fully Connected Layers. An FC layer is a Dense Neural Network Layer, which leads to a Numerical Output, achieving our task of utilizing image data for tasks like classification, object detection, etc. A fully connected layer refers to a neural network in which each neuron applies a linear transformation to the input vector through a weights matrix. As a result, all possible connections layer-to-layer are present, meaning every input of the input vector influences every output of the output vector.

🐼 Activation Functions

We’ve already explored various activation functions in the Machine Learning Roadmap. To learn about some activation functions specific to CNNs and also revise the earlier ones, follow this link

Here’s a blog on Sigmoid & SoftMax Activation functions

Here’s a research paper highlighting importance of an Activation function.

🐼 Connecting the Dots: The CNN Architecture

Go through the Fifth Lecture of CS231n and its Lecture Slides to understand how Convolutional Layers, Pooling Layers, Fully Connected Layers and Activation Functions are utilized to construct a CNN.

Here’s a great visualization explaining how to chose kernel size while building a CNN.

👾Day 4: Data Preprocessing, Regularization, Batch Norm, HyperTuning

🐼 Data Preprocessing

As learnt earlier, the images provided in the dataset might not be fit to pass through the model due to varying sizes, varying orientation of objects, noisy images, blurry image, incomplete image etc. Moreover, sometimes certain models perform better on filtered images or images in different color spaces which calls for a need to highly preprocess images before running them through the model. Methods like histogram normalization, greyscale filters, resizing, cropping, opening, closing, dilation are extremely helpful in the preprocessing step.

For understanding more about the preprocessing step, refer the first section of Lecture 6 of CS231n and its lecture slides

🐼 Regularization

As you delve deeper into Convolutional Neural Networks (CNNs), you’ll encounter challenges like overfitting and the need for more efficient and effective model architectures. Regularization techniques are essential tools to address these challenges, enhancing the performance and generalization capabilities of your CNN models.

Regularization techniques are strategies used to prevent overfitting, where a model performs well on training data but poorly on unseen data. These techniques introduce constraints and modifications to the training process, encouraging the model to learn more general patterns rather than memorizing the training data.

- Dropout:

Dropout is a technique where, during each training iteration, a random subset of neurons is “dropped out” or deactivated. This prevents neurons from co-adapting too much and forces the network to learn redundant representations, making it more robust. Typically, dropout is applied to fully connected layers, but it can also be used in convolutional layers.

- Batch Normalization:

Batch normalization normalizes the output of a previous activation layer by subtracting the batch mean and dividing by the batch standard deviation. This stabilizes and accelerates the training process, allowing for higher learning rates. It also acts as a regularizer, reducing the need for dropout in some cases.

Read this blog to grasp on above topics.

In depth implementation of Batch Normalization, along with a review of HyperTuning for CNN has been covered in the second section of Lecture 6 of CS231n and its lecture slides

👾Day 5: Data Augmentation, Optimizers Review

🐼 Data Augmentation

Image Augmentation

Sometimes, the dataset you have is not large enough, or does not capture all variations of the images leading to a pathetic accuracy score. For improving the training dataset, you can augment it by applying random transformations and filters to the same, helping in expanding the dataset and making it diverse at the same time.

Read this article to get idea of image augmentation: https://towardsdatascience.com/image-augmentation-14a0aafd0498

How to do data augmentation using tensorflow : https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/tutorials/images/data_augmentation.ipynb

No need to mug up the commands, rather learn about what possible data augmentation you can do with TensorFlow.

🐼 Optimizers Review and Second-Order Optimization

Gradient Descent, it’s variants and the optimizations on it (NAG, RMSProp, ADAM etc) utilize First-Order optimization as they only involve evaluation of first-order derivative of the loss function. Methods like the Newton Method and Quazi-Newton Methods utilize Second-Order Optimization, providing better results sometimes, but at the cost of space and time cost.

For a review of topics studied so far, their implementation, reviewing Optimizers studied in ML Roadmap and exploring Second Order Optimization, go through the Seventh Lecture of CS231n and its lecture slides

👾Day 6: Deep Learning Hardware and Frameworks

🐼CPU v/s GPU

- A CPU has Fewer cores, but each core is much faster and much more capable; great at sequential tasks

- A GPU has significantly higher number of cores, but each core is much slower and “dumber”, making it ideal for parallel tasks

Deep Learning is simply a large number of Linear Transformations (and a few non-linear as well), and a major reason for high compute is not the compute required for a single, or a sequence of operations, but the sheer scale of the number of independent operations performed. In order to tackle this, we can parallelize the independent tasks, and assign less compute to individual processes rather than allocating a huge compute on a single process or a sequence of dependent processes. This makes training DL models on GPU much more efficient.

🐼Computational Graphs DL Frameworks

The most relevant DL Framework now a days are Tensorflow and PyTorch. Caffe, which is a framework by Facebook is largely outdated and not used.

For learning more about DL Hardware, Tensorflow and PyTorch, go through the 8th lecture of CS231n and its lecture slides

Refer to these notes for an overview of the DL Hardware and Frameworks

This concludes the theoretical part of Introduction to Convolutional Neural Networks.

👾Day 7: Hands on Stuff

It’s time to get hands on some Practical Implementations.

Your task is to create a model for “Automatic Covid disease detection by Chest X-ray Images through CNN model”.

Description: You are given a dataset of X-rays images. Dataset is divided into train and test data further divided into three categories - Normal , Covid and Viral Pneumonia. You need to create a common directory for train and test images (hint: you need to import os library for this, look on internet for working) then resize the images and normalize data if needed, Split train data into train data and validation data. Convert categorical data into numerical data. You can also use ImageDataGenerator function from Tensorflow Library. Then Build a CNN model and fit the model on train data, validate the model and play with the hyperparameters for eg. no of layers, no. of filters, size of filters, padding, stride. You can also try different optimizers and activation functions. Also plot the your results for eg, loss vs epochs , accuracy vs epochs for train and validation data. You are expected to use Google Colab for this task.

DATASET: Here’s Dataset.

🦝 Week 4: Segmentation and CNN Architectures

👾Day 1: Segmentation and Clustering

Main Resource for the day: Notes of Lecture 10 of CS131. Constantly refer them while covering the subtopics.

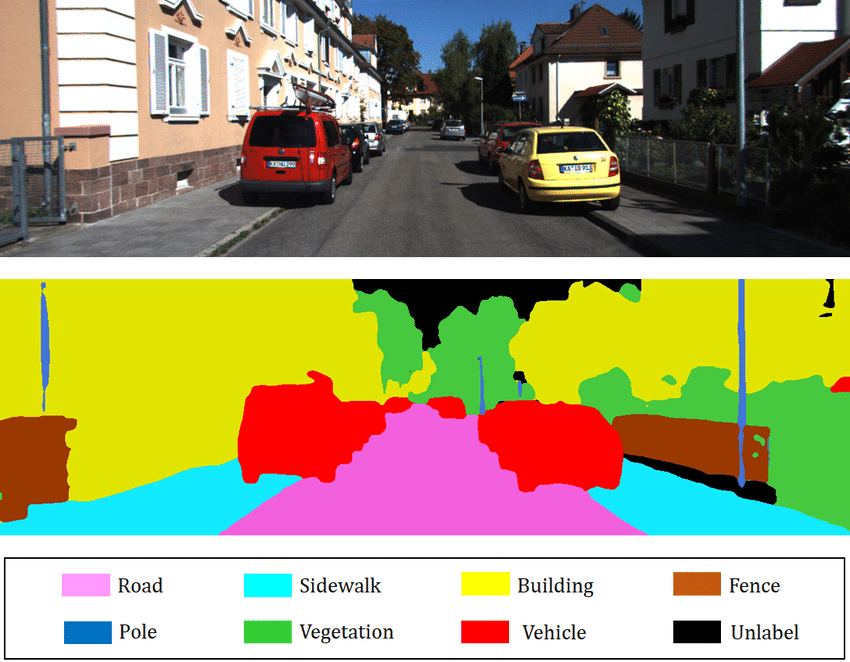

🐼 Segmentation

Image Segmentation

Image segmentation refers to the task of dividing an image into segments where each pixel in the image is mapped to an object. This task has multiple variants such as instance segmentation, panoptic segmentation and semantic segmentation. In simpler terms Image segmentation looks at an image and assigns each pixel an object class(such as background, trees, human, cat etc.). This finds several use cases such as image editing, background removal, autonomous driving and whatnot. it differs from object detection as this technique creates a mask over the object rather than a bounding box.

It is majorly divided into 3 types:

- Semantic Segmentation

- Instance Segmentation

- Panoptic Segmentation

Go through this article for a refresher on Image Feature Extraction, as they will be helpful in the following weeks.

Go through the following articles to understand Segmentation better:

- Intro to Image Segmentation

- Applications and Types of Image Segmentation

- Image Segmentation and its Types

- Image Segmentation Techniques

- Intersection over Union metric for segmentation evaluation

🐼 Clustering

We’ve already covered most of the Clustering algorithms in Machine Learning Roadmap. Here, we shall explore how clustering is used in image processing and CV.

Image Segmentation is the process of partitioning an image into multiple regions based on the characteristics of the pixels in the original image. Clustering is a technique to group similar entities and label them. Thus, for image segmentation using clustering, we can cluster similar pixels using a clustering algorithm and group a particular cluster pixel as a single segment.

Go through the following articles for understanding the role of clustering in image segmentation:

🐼Mean Shift for Segmentation

Main resource for the subtopic: First Section of Notes of Lecture 11 of CS131

Mean shift is a mode-seeking clustering algorithm widely used for the purposes of segmentation and searching for regions of interest in an image. This technique involves iteratively moving data points towards regions of maximum density, using a search window and a kernel. The aim is to determine both the extent of the search and the way in which the points are moved. The density of points is evaluated in a region around a point defined by the search window. For a better understanding, go through the following videos:

👾Day 2: Types of Segmentation

Today, we shall explore various types of segmentations in depth:

🐼Semantic Segmentation

Semantic Segmentation

Semantic segmentation tasks help machines distinguish the different object classes and background regions in an image.

- For understanding the concept and theory, refer to this article by IBM

- For understanding CNN architecture for Semantic Segmentation, refer to this blog by Jeremy Jordan.

- Famous Semantic Segmentation Techniques and their implementation

- Paper on Fully Convolutional Networks for Semantic Segmentation: This website contains the original research paper, as well as various GitHub Repositories with implemented code.

🐼 Instance Segmentation

Instance Segmentation

Instance segmentation, which is a subset of the larger field of image segmentation, provides more detailed and sophisticated output than conventional object detection algorithms. It predicts the exact pixel-wise boundaries of each individual object instance in an image. More on instance segmentations:

- Introduction to instance segmentation

- Difference between instance and semantic segmentation

- Instance Segmentation Algorithms

- Paper on Mask R-CNN for Object Instance Segmentation: Also contains github repos containing implementations of the papers

🐼 Panoptic Segmentation

Types of Segmentation

Panoptic Segmentation is a computer vision task that combines semantic segmentation and instance segmentation to provide a comprehensive understanding of the scene. The goal of panoptic segmentation is to segment the image into semantically meaningful parts or regions, while also detecting and distinguishing individual instances of objects within those regions. In a given image, every pixel is assigned a semantic label, and pixels belonging to “things” classes (countable objects with instances, like cars and people) are assigned unique instance IDs. More on Panoptic Segmentation:

👾Day 3: Transfer Learning

🐼 What is Transfer Learning

- Transfer learning is a machine learning technique where a model trained on one task is reused or adapted as the starting point for a model on a different but related task.

- It leverages knowledge gained from the source task to improve learning on the target task, especially when labeled data for the target task is limited.

- Benefits include reduced training time and the ability to achieve better performance on the target task with less data.

- Common in applications like natural language processing, computer vision, and speech recognition.

- Techniques include fine-tuning existing models, feature extraction, and domain adaptation.

An exercise which will teach you how to use transfer learning in your projects using TensorFlow.

🐼 General Resources for CNN Architectures

In the next few days, we’ll try to understand various CNN Architectures, their evolution and applications. Some of the general resources which cover the same are given below:

- CS231n Lecture 9 and its Lecture Notes (Don’t get baffled by the number of pages lmao 🦝, most of the slides contain images and flowcharts)

- ConvNet Lecture Notes

- CNN Architecture Notes by Aman

You don’t need to cover these resources this week 👾, these are just reference materials for the next few weeks.

👾Day 4: LeNet-5 and AlexNet

Before starting, watch this video to understand the architectures of ConvNets and their evolutions.

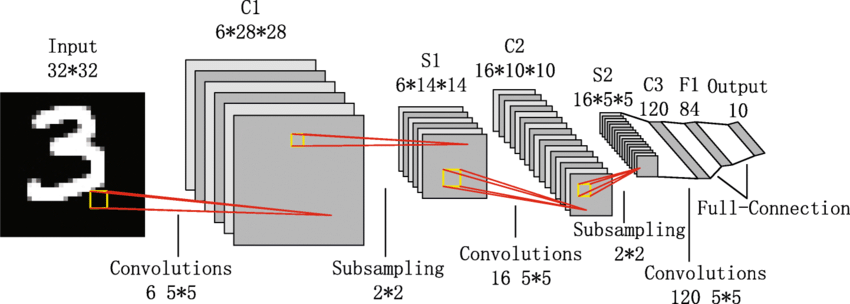

🐼 LeNet-5

LeNet-5 Architecture

The LeNet-5 Architecture was the very first ConvNet Architecture to be developed and implemented. It’s salient features are:

- Every convolutional layer includes three parts: convolution, pooling, and nonlinear activation functions

- Using convolution to extract spatial features (Convolution was called receptive fields originally)

- The average pooling layer is used for subsampling.

- ‘tanh’ is used as the activation function

- Using Multi-Layered Perceptron or Fully Connected Layers as the last classifier

- The sparse connection between layers reduces the complexity of computation

For understanding the model better, take a look at this and this article.

Try to read and implement this research paper on Document Recognition via LeNet.

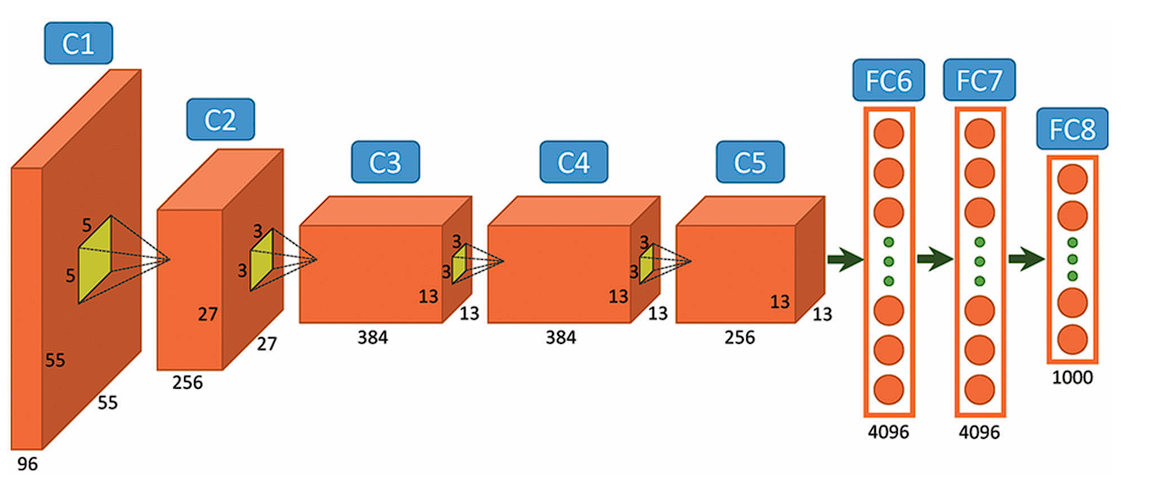

🐼 AlexNet

AlexNet Architecture

AlexNet was one of the first models to leverage the computational power of GPUs extensively. The model was trained on two GPUs, which allowed for faster training times and handling of large datasets like ImageNet. This network was very similar to LeNet-5 but was deeper with 8 layers, with more filters, stacked convolutional layers, max pooling, dropout, data augmentation, ReLU and SGD.

The article also contains code implementation of AlexNet, Brownie Points for understanding and implementing it.

👾Day 5: ZFNet

- The architecture is a refined version of AlexNet, focusing on improving performance by adjusting hyperparameters and exploring the learned features in greater depth.

- ZFNet retained basic architecture of AlexNet but made some architectural adjustments,particularly in the first few layers.

- AlexNet used 11x11,5x5 and 3x3 filter sizes while ZFNet used 7x7 filter size in the first layer only and 3x3 in the latter layers only. This reduced the training compute.

- There is stride of 4 in the first layer of AlexNet while in ZFNet there is stride of 2 used.

- AlexNet used Local Response Normalization while ZFNet used Local Contrast Normalization

- Utilizes a Deconvolutional Network to visualize the learned features and understand the workings of convolutional layers.

Read this article on ZFNet to see how it improves and builds upon AlexNet.

👾Day 6: VGG16, VGG19 and GoogleNet

🐼 VGG16 and VGG19

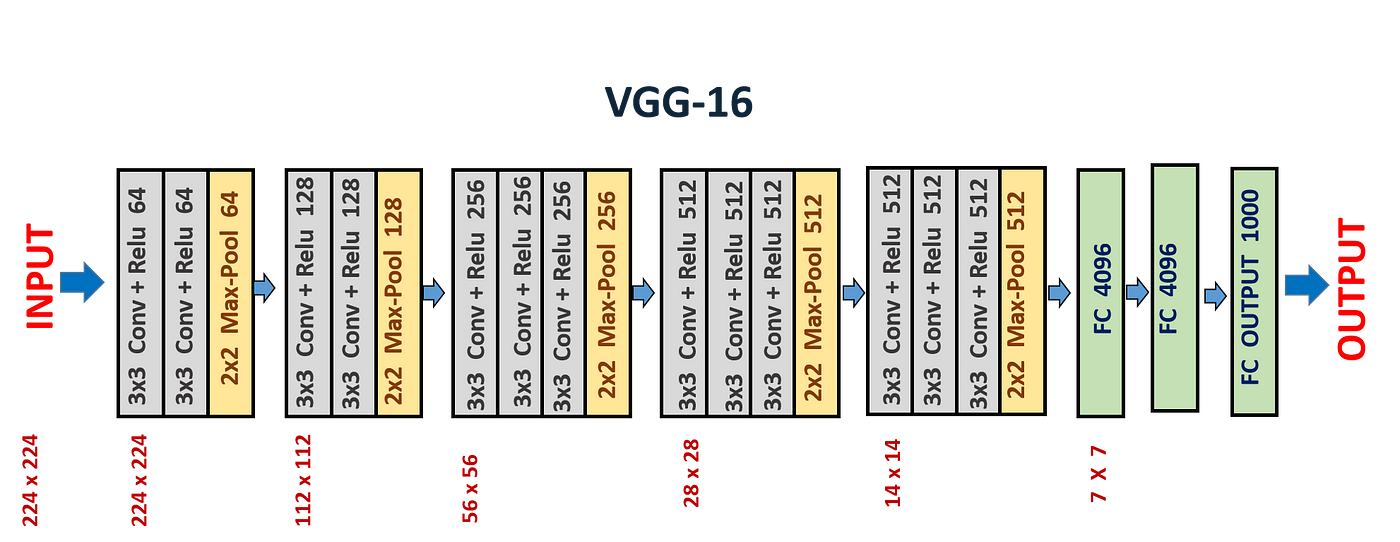

VGG16 Architecture

- VGG16 and VGG19 were introduced by the Visual Geometry Group (VGG) at Oxford University.

- These architectures were presented in the paper Very Deep Convolutional Networks for Large-Scale Image Recognition and stood out in the ILSVRC 2014.

- VGG16 and VGG19 are known for their simplicity and depth, consisting of 16 and 19 layers, respectively thus giving their names.

- It was recognized by Oxford Researchers that the operation performed by a $55$ kernel is same as the operation performed by 2 $33$ kernels with a pooling layer in the middle. So rather than increasing the kernel size, they only used $3*3$ kernels, but increased the depth of the model. This significantly increased the accuracy of the model.

For understanding the VGNet Architectures, go through this article

Post completing VGNet, go through this GFG Blog which summarizes and compares all the ConvNet Architectures studied to far.

👾Day 7: U-Net

U-Net Architecture

- The architecture is named U-Net because of its U-shaped structure, consisting of an encoder (down-sampling path) and a decoder (up-sampling path).

-

U-Net has a symmetric architecture with an encoder (contracting path) and a decoder (expanding path). This allows it to effectively capture and utilize both local and global contextual information.

- Read this article on UNET Architecture to get a deeper understanding.

- Follow this article and implement image segmentation using UNet by yourself.

- Paper on U-Net implementation for Biomedical Image Segmentation: This site contains a research paper on U-Net Implementation along with the github repositories with code implementation. Try to go through the paper and understand its implementation

🦝Week 5: Object Recognition and Detection

In the previous week, we learnt about Segmentation and its various types. This is a good time to introduce you to the large categories of applications of Computer Vision and the differences between them. CV has primarily 4 applications: Detection, Recognition, Segmentation and Generation.

Object recognition is simply the technique of identifying the object present in images and videos. In Image classification, the model takes an image as an input and outputs the classification label of that image with some metric (probability, loss, accuracy, etc). Object detection aims to locate objects in digital images. Image segmentation is a further extension of object detection in which we mark the presence of an object through pixel-wise masks generated for each object in the image.

To understand the differences better, go though the following articles:

- Detection v/s Recognition v/s Segmentation by GFG

- Kaggle article on Recognition v/s Detection v/s Segmentation

👾Day 1: Object Recognition

An object recognition system finds objects in the real world from an image of the world, using object models which are known a priori. This task is surprisingly difficult. Humans perform object recognition effortlessly and instantaneously. You’d be able to recognize a cute raccoon 🦝 instantaneously without any efforts, but for a computer to learn it and then recognize it is a largely complex task.

Recognizing a Cute Raccoon

First, it is important to understand Object Recognition Model pipelines and system design, for it involves various complex processes like Feature Detection, Hypothesis formation, Modelbase and Hypothesis verification. We’ve already studied the Feature Detection process, and how does the hypothesis function look like for a general CV task. In this week, we shall explore specific hypothesis for Object Recognition and Classification. For a foundational understanding of the pipelines and hypothesis, go through the following Chapter: https://cse.usf.edu/~r1k/MachineVisionBook/MachineVision.files/MachineVision_Chapter15.pdf)

Image Recognition Pipeline

We’ve learnt about K Nearest Neighbor Algorithm and how we can use it for segmentation. Note that KNN is a versatile algorithm for classification, and hence can be used in object recognition as well. To understand its implementation in Object Recognition use case and the problems with it, go through Notes of Lecture 11 of CS131

👾Day 2: Object Detection by Parts

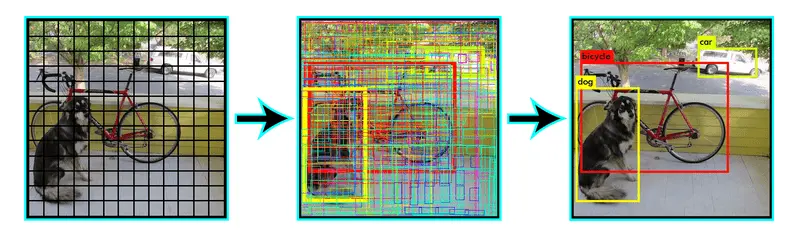

🐼 Introduction to Object Detection

Object detection is a technique that uses neural networks to localize and classify objects in images. This computer vision task has a wide range of applications, from medical imaging to self-driving cars. As opposed to detection, recognition also involves localization and involves construction of bounding boxes around specific objects, and detection of multiple image classes from same image.

For a basic introduction to Image Detection, go through the following articles:

🐼 Object Detection by Parts

Previously, we learnt about methods for detecting objects in an image; in this section, we shall learn about methods that detect and localize generic objects in images from various categories such as cars and people.

Note, that Object Detection is very similar to Classification and Recognition in the sense that mostly any Detection problem can be converted into a classification problem by adding additional steps like Image Pyramid, Sliding Window and Non-Maxima Supression.

Go through the notes of Lecture 15 and Lecture 16 of CS131 for this topic.

🐯 SlidingWindow Detector

Sliding Window Pipeline

In object detection problems, we generally have to find all the possible objects in the image like all the cars in the image, all the pedestrians in the image, all the bikes in the image, etc. To achieve this, we use an algorithm known as Sliding window detection.

Let’s say we wish to recognize all the cute raccoons 🦝 from an image of a forest. A sliding window is a fixed-size rectangle that slides from left-to-right and top-to-bottom within an image. We will pass a sliding window through the image of the forest, and at each stop of the window, we would:

- Extract the ROI

- Pass it through our image classifier

- Obtain the output predictions

For understanding how this works in detail and how to implement Sliding Window, go through:

- Conv Implementation of Sliding Implementation

- Sliding Window by Kaggle

- Short Video on theory behind Sliding Windows

- Lane Detection using Sliding Windows Python Implementation

🐯 Dalal and Triggs

Dalak and Triggs suggested using Histograms of Oriented Gradients, along with Support Vector Machines for the purposes of Image Detection, specifically Human Detection and Face Recognition. You can find the original paper here. For a visual understanding, go through the following videos:

🐯 Deformable Parts Model (DPM)

The simple sliding window detector is not robust to small changes in shape (such as a face where the eyebrows are raised or the eyes are further apart, or cars where the wheel’s may be farther apart or the car may be longer) so we want a new detection model that can handle these situations.

- Go through notes of Lecture 15 of CS131.

- Simultaneously watch the following short videos: DPM intro, DPM for rigid bodies, DOM results

You can then optionally go through Lecture 16 of CS131, which provides modern trends, methods for improving accuracy etc.

👾Day 3: InceptionNet and ResNets

Refer to the relevant parts of the following resources for GoogleNet (InceptionNet) and ResNets:

- CS231n Lecture 9 and its Lecture Notes (Don’t get baffled by the number of pages lmao 🦝, most of the slides contain images and flowcharts)

- ConvNet Lecture Notes

- CNN Architecture Notes by Aman

🐼 InceptionNet

InceptionNet Architecture Logic

IneptionNet ,developed by google, achieved a huge milestone in CNN classifiers when previous models tried to improve the performance and accuracy by just adding more and more layers. The Inception network, on the other hand, is heavily engineered. It uses a lot of tricks to push performance, both in terms of speed and accuracy.

its most notable feature is the inception modules that it uses. These modules are an arrangement of 1x1,3x3,5x5 convolution and max-pooling layers that aim to capture features on different ‘levels’. These levels refer to the different sizes an object can be in an image

Read this article to learn more about the inception net and its different versions.

🐼 ResNets

ResNet Architecture

ResNet or Residual Networks is similar to Inception Net in a sense because both aimed to solve the problem of CNN using more and more layers to try to get better efficiency. This architecture uses the residual blocks that work in a similar way to RNNs. They add a weighted part of the input to the output of the convolutional layer in order to mitigate the loss of information. Given below is an image of a residual block.

Read this article to learn more about residual networks.

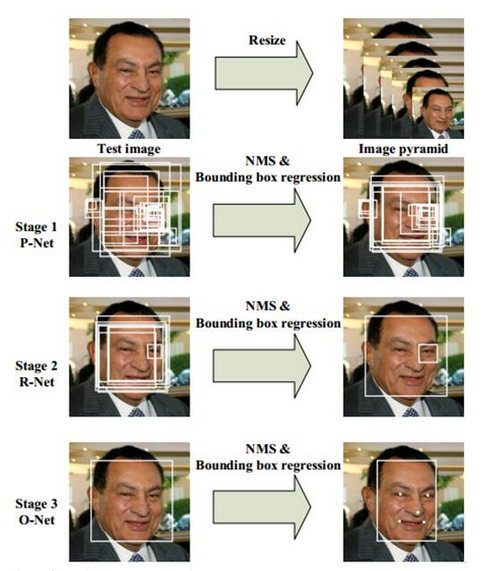

👾Day 4: Region-Based CNN, Fast RCNN, Faster RCNN

R-CNN Procedure

Go through 11th Lecture of CS231n and its lecture notes for a refresher on Detection and Segmentation, and a detailed explanation of R-CNN and its optimized versions.

Region-Based-CNN(R-CNN),introduced in 2013, was one of the first successful methods for object detection using CNNs. It used multiple networks to propose regions with possible objects and then passed these regions thrugh other networks to produce confidence scores.But there was a problem with this model, it was slow ,as it fed each proposed region(read the given article for more information) individually through the CNN.

Improving upon this, came the Fast R-CNN in 2015,it solved some of the problems by eliminating the need to pass multiple regions, it rather passed the whole image.

Finally the Faster-RCNN was proposed to improve the Fast-RCNN .It did so by changing the method it used to search the probable regions. It was around 20 times faster than the original R-CNN.

🔎 Read this article to review your understanding about R-CNN, Fast R-CNN, Faster R-CNN and YOLO(discussed later).

👾Day 5: RoboFlow and YOLO

🐼 RoboFlow

Roboflow is one of the most useful platforms when it comes to computer vision. It provides you with basically everything essential for computer vision and more ranging from Data Management, Model Training, Deployment and Collaboration.

- Data Management - Roboflow facilitates data annotation, preprocessing, and organization, including tasks like image labeling (bounding boxes, segmentation), dataset management, and version control.

-

Model Training - Users can train custom computer vision models or utilize pre-trained models for tasks such as object detection and image classification. It supports popular frameworks like TensorFlow and PyTorch.

-

Deployment - The platform enables easy deployment of trained models across various environments, including mobile devices, web applications, and edge devices. Tools are available for optimizing model size and latency.

- Collaboration - Teams can collaborate effectively on projects through role-based access control and version control for datasets and models.

To get a more comprehensive idea of what roboflow can do take a look at this video and follow it through for a quick implementation of a project.(for a more interesting approach, try to make a project of your own with a custom dataset)

Also check out this official blog and this playlist by RoboFlow themselves.

Roboflow can be used to implement YOLO, one of the most useful models around in the domain of computer vision. Let us learn more about it.

🐼 YOLO

YOLO

YOLO (stands for You-Only-Look-Once), is a series of computer vision models that can preform object detection, image classification, and segmentation tasks. I would say that YOLO is a jack of all trades in computer vision. YOLO has seen constant development over the years starting with the first YOLO model YOLOv1 to the current latest YOLOv10. It is a single shot algorithm that looks at the image once and predicts the classes and bounding boxes in a single forward pass, this makes YOLO much faster than other object detection models. This single stage architecture also aids in the training process making it more efficient than others

Here’s a simplified breakdown of how it works. Image is divided into a grid and split into cells. Each cell predicts bounding boxes for objects it might contain and then features are extracted. Each cell analyzes the image features within its area using CNNs. These features capture the image’s details. Bounding boxes and confidence scores are predicted. Based on the extracted features, the model predicts-bounding box coordinates(if multiple overlapping bounding boxes are predicted for the same class then yolo performs NMS to eliminate bounding boxes) and confidence score.

- Non-Maxima Suppression (NMS): If multiple cells predict bounding boxes for the same object, NMS selects the most confident one and discards overlapping or low-confidence boxes. Here is a video to get an idea about NMS

🔎 Read this article to review your understanding about R-CNN, Fast R-CNN, Faster R-CNN and YOLO.

👾Day 6: Hands on YOLO

Go through this article from machinelearningmastery for an overview of whatever we’ve studied this week and a list of important RCNN and YOLO papers, and Code projects which you can implement.

For a comparative analysis of Image Detection algorithms available at present, go through this article

🔎 Implement YOLOv8 by following this video and create a project for yourself. If you are done with this try to do Object tracking on a video with yolo.

👾Day 7: Optical Character Recognition and Visualizations

🐼OCR

OCR, short for Optical Character Recognition, is a technology used to detect and convert the text contained in images(such as scanned documents, photos of signs, or screenshots) into machine-readable text. This enables computers to analyze the content within images, making it searchable, editable, or suitable for various other applications. There are a lot of python libraries and models for OCR, and to get started lets use EasyOCR..

First install the requirements

pip install opencv-python

pip install easyocr

Here’s the code

import easyocr

reader = easyocr.Reader(['en']) # specify the language

result = reader.readtext('image.jpg')

for (bbox, text, prob) in result:

print(f'Text: {text}, Probability: {prob}')

There are many more OCRs available on the internet such as KerasOCR, tesseract OCR, PaddlePaddle. Try them out on your own.

🐼Visualizations

The issue with ConvNets is that it’s extremely tough to figure out what’s going on under the hood and how the neural network is actually learning and identifying patterns and matching them. Scientists have attempted to understand this and create various visualizations of the same, but the understanding is still somewhat incomplete. Go through the 12 Lecture of CS231n and its lecture notes for these visualization, and other miscellaneous topics.