Containerization and Scaling: From Zero to Millions (and Beyond!)

Ever wondered how apps like Instagram, Netflix, or even your favorite game handle millions of users at once? It’s not like they were born that way, handling massive traffic right from the start. They scaled. And one of the unsung heroes behind this magical scaling journey is something called containerization. Sounds fancy, right? But don’t worry, it’s simpler than it seems, and we’re here to break it down for you. Let’s dive in.

What’s the Big Deal with Containers?

Picture this: You’re moving houses. Instead of tossing your stuff loosely into the truck (chaos!), you pack everything into neatly labeled boxes. These boxes are easy to stack, carry, and unpack without the risk of losing or mixing things. That’s exactly what containers do for apps. They bundle an app’s code along with everything it needs to run, like libraries, runtime, and dependencies, into a neat little package. This package works anywhere, whether it’s on your laptop, a giant server, or even in the cloud.

From a technical standpoint, containers rely on OS-level virtualization. Tools like Docker use OS-level virtualization with tricks like cgroups and namespaces. Think of invisible fences keeping each container’s stuff separate and safe. This isolation ensures that containers don’t interfere with each other, even when running on the same host machine.

But you might be wondering, “Didn’t we already have something similar with Virtual Machines (VMs)? Why the sudden obsession with containers?”

Let’s spill the tea.

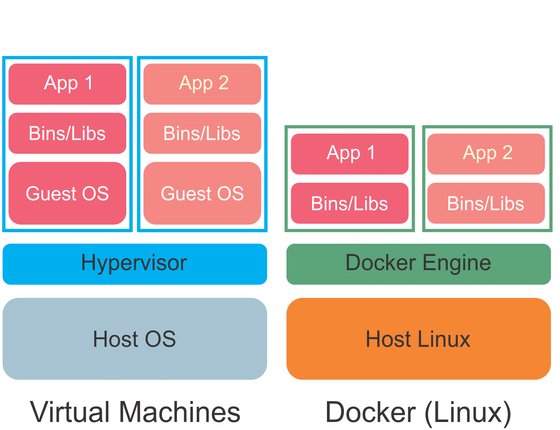

Containers vs. Virtual Machines: The Ultimate Showdown

You might ask: “Why not just use virtual machines (VMs)?” Let’s break it down:

| Feature | Containers (Apartments) | VMs (Full Houses) | |

|---|---|---|---|

| Weight | Super lightweight: no need for their own OS. They share the host OS, saving tons of space. | Heavyweight: each VM carries its own OS, utilities, and all the baggage. | |

| Startup Time | Blink-and-you’ll-miss-it fast! Containers are ready in seconds. | Slow and steady: VMs take minutes to boot up. | |

| Resource Usage | Efficient sharers: containers squeeze more apps onto the same hardware without hogging resources. | Resource hogs: each VM demands its own chunk of hardware and resources. | |

| Portability | The ultimate travel buddy! Containers run consistently anywhere: your laptop, the cloud, or even a spaceship. | Not so flexible: VMs can be moved, but they’re bulkier and require more effort to migrate. | |

| Infrastructure | Share the love! Containers share the host OS kernel, keeping things simple and streamlined. | Standalone giants: VMs come with their own OS, making them isolated but heavier. | |

| Use Case | Perfect for modern app development, microservices, and environments where speed and efficiency are key. | Ideal for running multiple different OSes or legacy applications that need full isolation. |

For instance, a Python app container packages the exact interpreter version, libraries like NumPy, and even OS dependencies (such as Ubuntu LTS) so that the “it works on my machine” excuse is history.

Visualizing the Kernel: How Containers Differ from VMs

An image of the kernel stack can illustrate the difference effectively:

- VM Stack: Hardware → Hypervisor → Guest OS (Kernel) → Application

- Container Stack: Hardware → Host OS (Shared Kernel) → Container → Application

The Key Differences: Kernel Sharing vs. Full OS Emulation

Virtual machines operate by emulating an entire operating system, each with its own kernel and system libraries. This duplication of resources leads to inefficiencies in memory and storage. Containers, on the other hand, share the host OS kernel, eliminating the need for duplicating the kernel logic. This shared kernel architecture enables containers to be lightweight and faster to start compared to VMs.

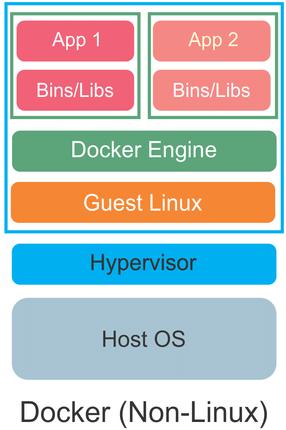

Example: Docker on Windows and the Role of WSL

Docker’s functionality on Windows highlights the importance of kernel sharing. Docker requires a Linux-based kernel to function efficiently. Since Windows uses a different kernel, Windows Subsystem for Linux (WSL) bridges the gap by emulating a Linux-compatible environment. This dependency demonstrates how critical the kernel is to container operations: containers rely on the host OS kernel to maintain their lightweight and efficient structure.

Here, “Guest Linux” represents the WSL that emulates the linux kernel for Docker to function properly.

The adoption of containers represents a leap in how applications are developed, deployed, and scaled, driven by their ability to simplify operations, save resources, and seamlessly integrate with modern development practices.

Scaling: From One User to a Million (and Beyond)

When your app goes viral, containers are your secret sauce to keep it humming. Here’s how they tackle scaling:

1. Rapid Deployment

Containers spin up faster than you can order a pizza. In a surge of users, auto-scaling systems can launch new containers in just a few seconds. Consider this Kubernetes auto-scaling configuration:

# Kubernetes auto-scaling config example

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: my-app-autoscaler

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: my-app

minReplicas: 3

maxReplicas: 100

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 80

This code tells Kubernetes to add more containers when the CPU hits 80%, so your app handles the crowd without a sweat.

2. Microservices Architecture

Instead of one giant monolithic app, break it down into microservices. Each part, whether it’s the login service, payment gateway, or recommendation engine, runs in its own container. That way, if the login page gets hammered by a million users, you only scale that component, leaving the rest undisturbed.

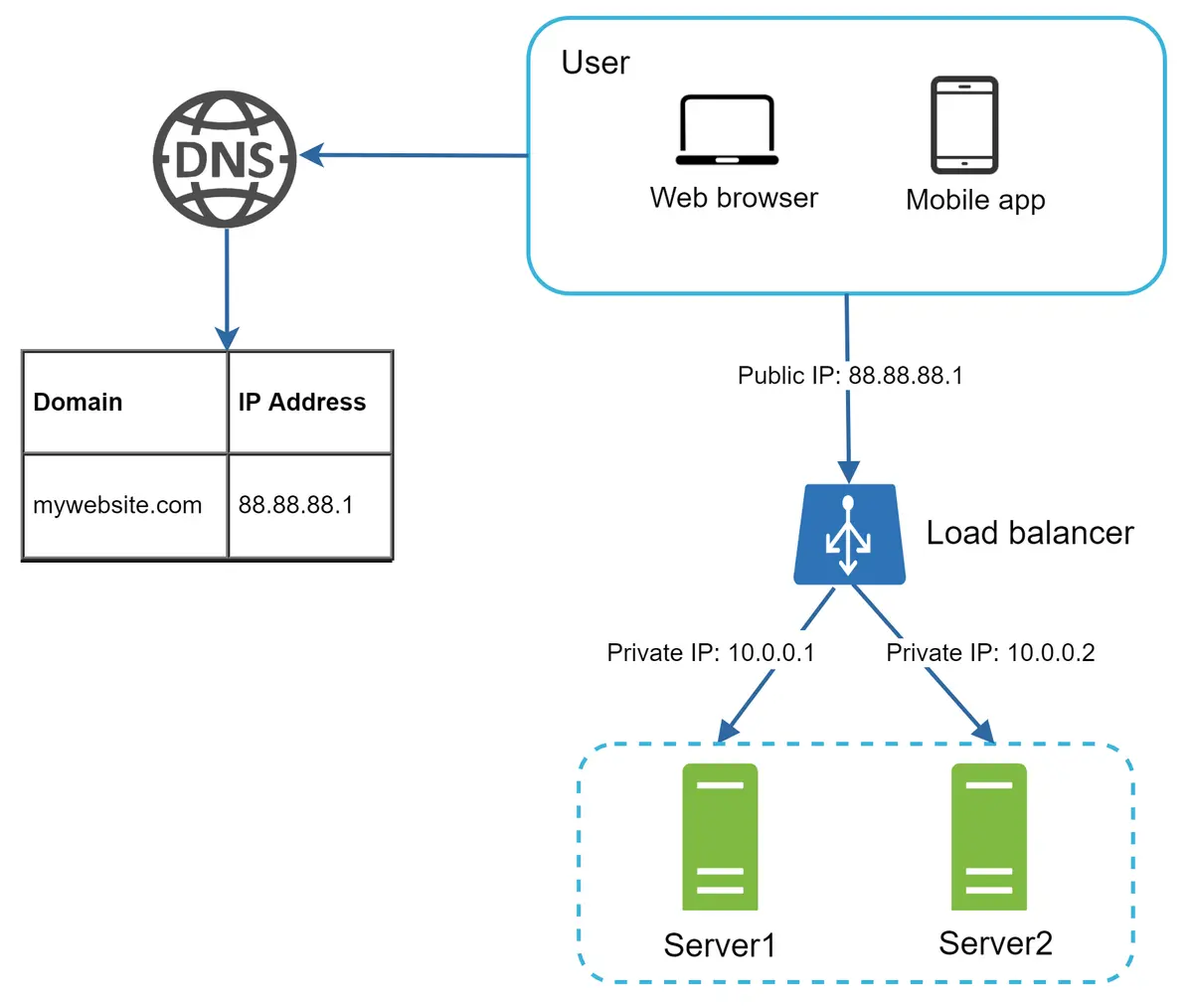

3. Load Balancing Made Easy

Distributing user requests among multiple container instances is like having several checkout counters at a bank. Tools like Kubernetes and HAProxy ensure that no single container is overwhelmed.

Kubernetes: The Symphony Conductor

If containers are the instruments, Kubernetes is the maestro orchestrating the symphony. Here’s what it brings to the table:

- Orchestration: Automates deployment, scaling, and management of containers.

- Auto-Healing: Quickly replaces any container that crashes.

- Rolling Updates: Seamlessly update your app without downtime.

- Resource Allocation: Prevents any single container from hogging resources.

Deploy your app effortlessly using Helm (Kubernetes’ package manager):

# Deploying with Helm

helm install my-app ./chart \

--set replicaCount=5 \

--set image.tag="v2.3.1"

Pro Tip: Try using K9s, the Kubernetes CLI that makes management feel like you’re in your own high-tech command center.

Securing Your Containerized Castle

While containers make scaling a breeze, they also come with security challenges. Here’s a quick rundown of common pitfalls and solutions:

| Risk | Solution | Tools |

|---|---|---|

| Bloated Images | Use multi-stage builds | Docker BuildKit |

| Secret Leaks | Store secrets securely | Kubernetes Secrets, Sealed Secrets |

| Zombie Containers | Set strict resource limits | cgroups |

| Vulnerability Scanning | Regular image scans | Trivy, Clair |

An improved Dockerfile might look like this:

# Stage 1: Builder

FROM python:3.11 as builder

COPY requirements.txt .

RUN pip install --user -r requirements.txt

# Stage 2: Runtime

FROM python:3.11-slim

COPY --from=builder /root/.local /root/.local

COPY . .

CMD ["python", "app.py"]

This approach trims down your image size dramatically, like swapping a moving truck for a compact carry-on!

CI/CD: The Container Assembly Line

Modern development pipelines are powered by containers. Here’s a typical flow:

- Commit Code: A developer pushes changes to GitHub.

- Build Container: CI tools like GitHub Actions generate a Docker image.

- Scan: Tools such as Trivy scan for vulnerabilities.

- Deploy: Continuous delivery systems like Argo CD push the update to Kubernetes.

- Monitor: Use Prometheus and Grafana to keep an eye on performance.

At Netflix, this process can deploy over a thousand updates daily, faster than you can binge your favorite series!

Real-World Patterns and Use Cases

Containers enable diverse application architectures. Consider these blueprints:

E-Commerce Stack

Frontend (React) → API Gateway →

Cart Service → Payment Service →

DB Proxy → Redis → PostgreSQL

Each component runs in its own container and scales independently, ensuring a smooth shopping experience during peak hours.

AI/ML Workflow

Data Ingestion → Preprocessing →

Model Training → API Serving

Containers not only streamline the workflow but also support GPU acceleration for rapid model training.

And for legacy systems, many enterprises use a “strangler pattern” to gradually containerize older applications, saving millions in cloud costs while modernizing their infrastructure.

But It’s Not All Sunshine and Rainbows

While containers are awesome, they come with their own challenges:

- Security Concerns: Poorly isolated containers can expose vulnerabilities. Always double-check your container images and follow best practices. Use tools like Trivy for vulnerability scanning.

- Complexity: Managing thousands of containers (without tools like Kubernetes) can be a nightmare. This is where service meshes like Istio come into play.

- Monitoring: Keeping an eye on container health and performance requires robust monitoring tools like Prometheus and Grafana.

The Future: Containers 2.0

What’s next in the container universe?

- Wasm Containers: Startup in milliseconds, faster than ever!

- eBPF Magic: Kernel-level networking unlocking 100Gbps speeds.

- Serverless Containers: Combining the flexibility of serverless with containerization.

Imagine deploying an app globally in just 10 seconds. The possibilities are as exciting as they are limitless.

Wrapping Up: Containers FTW!

From helping startups take off to supporting tech giants like Netflix, containerization has transformed the way we build and scale applications. It simplifies deployment, optimizes resource usage, and ensures your code runs consistently across different environments.

Whether you’re tinkering with code at home or designing the next big thing, containers give you the flexibility to scale effortlessly. So, when your app suddenly faces a flood of users, containers are there to keep things running smoothly.

Here’s to building fast, reliable, and scalable applications, one container at a time! 🚀

Write the code, embrace the efficiency, and let containerization propel your app from idea to millions of users, seamlessly and effortlessly!